Swift Regex Deep Dive

iOS MacOur introductory guide to Swift Regex. Learn regular expressions in Swift including RegexBuilder examples and strongly-typed captures.

Editor’s note: This is the fourth post in our series on building an iOS app in Rust.

Welcome to Part 4 of the “Let’s Build an iOS App in Rust” series. We’ll continue to build on the tools and techniques we explored in the first three parts.

In this post, we’re going to create a basic view model in Rust. This will be our first foray into multithreading, because the view model needs to be accessible from Swift on the main thread (we’ll use it to implement a UITableViewDataSource), but we’re going to send updates from background threads managed entirely by Rust.

As always, the code for this post is available on GitHub. I will not go over every line of code in this post, but you can download and run the sample project from there. It may also be helpful to look at some of the code I do point out in context.

The goal for this post is a view model, written in Rust, that is read by Swift on the iOS main thread and modified by multiple Rust background threads.

This sets us up perfectly to introduce data races, a particularly insidious kind of race condition. A data race occurs when two or more threads access the same memory location at the same time without synchronization, and at least one of them is writing to it. Data races cause undefined behavior: maybe your app crashes, maybe it works correctly, or maybe it keeps running but does the wrong thing. Bad news.

In a pure Rust program (assuming no unsafe code), data races do not exist, because Rust’s ownership system will enforce rules that prevent them at compile time. Because we’re going to be working across two different languages, we’re not going to be able to statically guarantee correctness like we could in a pure Rust program. We need a strategy to help make sure we do the right thing. I’ll propose two different strategies for managing this complexity.

The first strategy is to give Swift its own copy of the view model every time we make a change from Rust. This avoids the data race by avoiding sharing altogether: whenever a Rust background thread updates the view model, it will take a snapshot of the new view model state and give that snapshot to Swift. Swift will be responsible for destroying all the view model copies it gets, but we won’t have to worry about concurrent access, since Swift owns (and holds the only reference to) its copies. Dropbox uses this technique in their cross-platform C++ libraries.

I don’t know about you, but my initial reaction to that strategy was one of… incredulity. Isn’t it incredibly expensive to create a new snapshot of the view model state every time you change it? As is always the case with performance concerns, the answer is “it depends.” If your view model state is “small” (for some definition of small), it’s probably fine. If your view model state is big but you can figure out a way to make its in-memory representation small, it’s probably fine then, too. (An example of this would be if your view model is holding an array of images: you don’t need to copy all the image data every time; you can just make more references to the same unchanging data.)
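To make the “more references to the same unchanging data” idea concrete, here is a minimal sketch. It assumes a hypothetical ImageViewModel whose images are stored behind Arc; neither ImageViewModel nor ImageData comes from the sample project. Cloning such a view model copies only the vector of pointers, and the image bytes stay shared.

```rust
use std::sync::Arc;

// Hypothetical stand-in for decoded image data (not from the sample project).
#[derive(Debug)]
pub struct ImageData {
    pub bytes: Vec<u8>,
}

// Hypothetical view model: each image lives behind an Arc, so a snapshot
// made with .clone() copies one pointer per image, never the bytes themselves.
#[derive(Clone, Debug)]
pub struct ImageViewModel {
    pub images: Vec<Arc<ImageData>>,
}
```

The live view model and any snapshot handed to Swift point at the same ImageData, so `Arc::ptr_eq(&live.images[0], &snapshot.images[0])` is true. Each snapshot costs only a reference-count increment per image.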

The second strategy we’ll examine is to truly share a single instance of a view model between the two languages by giving Rust a way to perform updates on the iOS main thread. This will make use of some relatively advanced techniques on the Rust side, so we’ll explore this strategy in the next post in this series.

Let’s look at the view model implementation on the Rust side. Here is a subset of the code (you can see the full source on GitHub):

```rust
#[derive(Clone)]
pub struct ViewModel {
    values: Vec<String>,
}

impl ViewModel {
    pub fn len(&self) -> usize {
        self.values.len()
    }

    pub fn value_at_index(&self, index: usize) -> &str {
        &self.values[index]
    }
}
```

Our view model is very simple: we have a vector of Strings that we’ll display in a table view on the iOS side. We’ll have a number of Rust threads that modify the values in various ways (appending new values, deleting values, and modifying existing ones).

There are a couple of things to note about this Rust code:

- The ViewModel struct derives an implementation of the Clone trait. This means we can call the .clone() method on a ViewModel instance, and we’ll get back a copy that is made by calling .clone() on each of ViewModel’s fields (the values vector, in this case).
- The value_at_index method returns a reference to memory inside the view model itself. This is fine because Swift is going to be the sole owner of ViewModel instances. The &str references returned by value_at_index will continue to be valid until the ViewModel is destroyed.

OK, so if this is the view model we’ll give to Swift, what does the “giving”?
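The following minimal sketch shows why a derived Clone gives us a true snapshot. It assumes hypothetical `new` and `push` methods, because the post’s full ViewModel on GitHub is not reproduced here. After cloning, further changes to the original do not show up in the copy, since each copy owns its own strings.

```rust
// Minimal stand-in for the post's ViewModel. `new` and `push` are
// hypothetical helpers added so the example is self-contained.
#[derive(Clone, Debug, PartialEq)]
pub struct ViewModel {
    values: Vec<String>,
}

impl ViewModel {
    pub fn new() -> ViewModel {
        ViewModel { values: Vec::new() }
    }

    pub fn push(&mut self, value: String) {
        self.values.push(value);
    }

    pub fn len(&self) -> usize {
        self.values.len()
    }

    // The returned &str borrows from this particular copy, so it stays valid
    // for as long as the copy does.
    pub fn value_at_index(&self, index: usize) -> &str {
        &self.values[index]
    }
}
```

Cloning after one push and then pushing again leaves the snapshot with one value and the original with two. This is the property the snapshot strategy relies on.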

We need another type, a ViewModelHandle, and a Rust trait (very similar to a Swift protocol) defining how we’ll provide update notifications back to iOS:

```rust
pub trait ViewModelObserver: Send + 'static {
    fn inserted_item(&self, view_model: ViewModel, index: usize);
    fn removed_item(&self, view_model: ViewModel, index: usize);
    fn modified_item(&self, view_model: ViewModel, index: usize);
}

// TODO: What should we store in the ViewModelHandle?
pub struct ViewModelHandle;

impl ViewModelHandle {
    pub fn new<Observer>(num_threads: usize, observer: Observer) -> (ViewModelHandle, ViewModel)
        where Observer: ViewModelObserver
    {
        // TODO: Create a ViewModel and a ViewModelHandle.
        // Start up `num_threads` threads that will modify the ViewModel
        // and notify `observer` when they do so.
        unimplemented!()
    }
}
```

First let’s look at ViewModelObserver. It’s a trait that requires implementors to provide three methods: we’ll call inserted_item whenever we update the view model by appending a new value, removed_item whenever we update the view model by removing a value, and modified_item whenever we update the view model by changing an existing value. In all three cases, we’ll pass back to Swift a copy of the current ViewModel (after the change we made) and the index of the value that was added, removed, or changed.

One curious thing about ViewModelObserver is that it requires its implementors to also be Send + 'static. Send is a special marker trait: it doesn’t have any method requirements; instead, the Rust compiler uses it to indicate that a value of the type can be transferred from one thread to another. We need this requirement because we’re going to be calling methods on the observer from Rust background threads. 'static is a requirement on the lifetime of the type conforming to ViewModelObserver: it cannot hold references to other Rust objects that could possibly be deallocated while the thread is running (i.e., non-'static references).
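Here is a minimal sketch of why those bounds matter. It assumes a hypothetical Observer trait with the same Send + 'static bounds and a hypothetical ChannelObserver that forwards indices over a channel. `std::thread::spawn` requires its closure, and everything the closure captures, to be Send + 'static, so any observer we move onto a background thread must satisfy both. A type holding a borrowed `&'a Vec<String>` with a non-'static `'a` could not implement this trait.

```rust
use std::sync::mpsc;
use std::thread;

// Hypothetical trait mirroring ViewModelObserver's bounds.
pub trait Observer: Send + 'static {
    fn notify(&self, index: usize);
}

// mpsc::Sender<usize> is Send and owns no borrowed data,
// so this type satisfies Send + 'static.
pub struct ChannelObserver(pub mpsc::Sender<usize>);

impl Observer for ChannelObserver {
    fn notify(&self, index: usize) {
        // Ignore send errors: the receiver may already be gone.
        let _ = self.0.send(index);
    }
}

// thread::spawn demands Send + 'static for the moved closure, which is
// exactly what the O: Observer bound guarantees.
pub fn notify_from_background<O: Observer>(observer: O, index: usize) -> thread::JoinHandle<()> {
    thread::spawn(move || observer.notify(index))
}
```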

Before we can figure out how to flesh out ViewModelHandle, let’s think about what we’re going to do on the Rust background threads. Every thread needs a reference to the same view model and observer (so it can make changes and notify the observer). Since all threads want write access to the same memory, we must protect that memory somehow. Let’s define a new struct, Inner, that contains the memory we want to share:

```rust
struct Inner<Observer: ViewModelObserver> {
    view_model: ViewModel,
    observer: Observer,
}
```

To create a safe, shared reference to a single instance of Inner, we need two types from the Rust standard library:

- Arc<T> is an “atomically reference counted” T. Arc provides a way to wrap up an instance of a type to be shared across multiple threads. If you call .clone() on an Arc<T>, you increase the reference count but don’t actually clone the contained instance. The T that is contained in the Arc will be deallocated once all the references to it go out of scope.
- Mutex<T> is a mutually exclusive lock protecting a value of type T. In Rust, a mutex isn’t standalone; it is always protecting something. In order to read or write the protected T, one must first call .lock() on the mutex to get back a MutexGuard; the mutex will unlock when the MutexGuard goes out of scope.

(Note: Rust’s Arc type is completely unrelated to Swift and Objective-C’s ARC system. They happen to share the same short name, but Rust’s “atomically reference counted” type is part of its standard library, while Swift’s “automatic reference counting” is part of the compiler.)
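Here is a minimal sketch of the Arc<Mutex<T>> combination on its own. It uses a plain Vec<String> in place of Inner, and the function name push_from_threads is hypothetical. Each thread gets a clone of the Arc, which bumps the reference count without copying the vector, and must lock the Mutex before pushing.

```rust
use std::sync::{Arc, Mutex};
use std::thread;

// Spawns `num_threads` threads that each push one string into a shared,
// mutex-protected Vec, then returns a sorted copy of the results.
pub fn push_from_threads(num_threads: usize) -> Vec<String> {
    // Arc lets every thread own a handle to the same Mutex; the Mutex serializes access.
    let shared = Arc::new(Mutex::new(Vec::<String>::new()));
    let mut handles = Vec::new();
    for id in 0..num_threads {
        // Cloning the Arc increments the reference count; the Vec is not copied.
        let shared = Arc::clone(&shared);
        handles.push(thread::spawn(move || {
            // lock() returns a Result; unwrap() panics if the mutex is poisoned.
            shared.lock().unwrap().push(format!("rust-thread-{}", id));
        }));
    }
    for handle in handles {
        handle.join().unwrap();
    }
    // Lock once more and clone the contents out; the guard drops at the end of the statement.
    let mut values = shared.lock().unwrap().clone();
    values.sort();
    values
}
```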

We’ll combine these and create an Arc<Mutex<Inner>>, i.e., an atomically reference-counted mutex containing an Inner. We’ll give all our background thread workers a clone of this value (so they all get references to the same Mutex), an ID number they can use when updating the view model strings, and a way to determine when they should stop executing. Let’s combine these into a ThreadWorker struct:

```rust
use std::sync::{mpsc, Arc, Mutex};

struct ThreadWorker<Observer: ViewModelObserver> {
    inner: Arc<Mutex<Inner<Observer>>>,
    thread_id: usize,
    shutdown: mpsc::Receiver<()>,
}
```

What is an mpsc::Receiver<()>? It’s the receiving end of a channel (mpsc stands for “multiple producer, single consumer”) that lets us communicate safely between threads. In this case, we’re not actually going to send any data at all. Instead, we’ll use this to implement a should_shutdown method on ThreadWorker that will return true if the sending end of the channel has been disconnected (i.e., because it’s been deallocated):

```rust
impl<Observer: ViewModelObserver> ThreadWorker<Observer> {
    fn should_shutdown(&self) -> bool {
        match self.shutdown.try_recv() {
            // Disconnected means the sending end is gone - we should stop.
            Err(mpsc::TryRecvError::Disconnected) => true,
            // Empty means the sending end is still alive - we should keep running.
            Err(mpsc::TryRecvError::Empty) => false,
            // We should never actually receive data - panic if we do.
            Ok(()) => unreachable!("thread worker channels should not be used directly"),
        }
    }
}
```
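To see the disconnect behavior in isolation, here is the same match written as a free function over a bare Receiver. This standalone should_shutdown is a hypothetical simplification of the method above. Dropping the only Sender flips the result from false to true, even though no message is ever sent.

```rust
use std::sync::mpsc;

// Returns true once every Sender for this channel has been dropped.
pub fn should_shutdown(shutdown: &mpsc::Receiver<()>) -> bool {
    match shutdown.try_recv() {
        // All senders are gone: time to stop.
        Err(mpsc::TryRecvError::Disconnected) => true,
        // A sender is still alive but nothing was sent: keep running.
        Err(mpsc::TryRecvError::Empty) => false,
        // The channel is only used for its disconnect signal.
        Ok(()) => unreachable!("shutdown channels never carry data"),
    }
}
```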

The three things we want to do from a ThreadWorker are to add a value, remove a value, or modify a value. The implementations for all three are similar, so we’ll look at adding a value here, and you can see the other two on GitHub:

```rust
impl<Observer: ViewModelObserver> ThreadWorker<Observer> {
    fn add_new_item(&self) {
        // Lock the mutex. (unwrap() asserts that the mutex isn't "poisoned", which
        // occurs if another thread panics while holding the mutex.)
        let mut inner = self.inner.lock().unwrap();

        // With the mutex locked, push a string containing our thread_id onto
        // the view model.
        inner.view_model.push(format!("rust-thread-{}", self.thread_id));

        // Still with the mutex locked, notify the observer that we inserted an item,
        // giving them a clone of the current view model state and the index of the
        // item we added.
        inner.observer.inserted_item(inner.view_model.clone(), inner.view_model.len() - 1);
    }
}
```

When we lock a mutex, we get back a MutexGuard. This is a powerful type: it gives us write access to the contained value (an Inner, in our case), and it automatically unlocks the mutex when the guard falls out of scope (when add_new_item returns). We’ll discuss why we continue to hold the mutex locked while we call back to the observer toward the end of this post.
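The scoping rule can be checked directly. This minimal sketch uses a hypothetical function name. It holds a guard inside a block and uses `Mutex::try_lock`, which never blocks, to observe that the mutex is locked while the guard is alive and unlocked once the block ends.

```rust
use std::sync::Mutex;

// Returns (could_relock_while_guard_alive, could_relock_after_scope).
pub fn lock_state_during_and_after_scope(m: &Mutex<Vec<String>>) -> (bool, bool) {
    let during = {
        // This guard keeps the mutex locked until the end of the block.
        let mut guard = m.lock().unwrap();
        guard.push("rust-thread-0".to_string());
        // try_lock returns Err(WouldBlock) instead of waiting.
        let relocked = m.try_lock().is_ok();
        relocked
    }; // `guard` is dropped here, unlocking the mutex
    let after = m.try_lock().is_ok();
    (during, after)
}
```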

We can now implement the “main” function for our thread workers (some non-essential details are omitted):

```rust
impl<Observer: ViewModelObserver> ThreadWorker<Observer> {
    fn main(&self) {
        // add a new item immediately
        self.add_new_item();

        // loop "forever"
        loop {
            // sleep for some random-ish amount of time (1-4 seconds)
            thread::sleep(...);

            // check and see if we should stop
            if self.should_shutdown() {
                println!("thread {} exiting", self.thread_id);
                return;
            }

            // 20% of the time, add a new item.
            // 10% of the time, remove an item.
            // 70% of the time, modify an existing item.
            // (These percentages require a uniform random number from 0 through 9.)
            match random_number_between_0_and_9() {
                0 | 1 => self.add_new_item(),
                2 => self.remove_existing_item(),
                _ => self.modify_existing_item(),
            }
        }
    }
}
```

So every thread worker we start up will first add an item, then go into an infinite loop where it sleeps, shuts down if it should, and then modifies the view model randomly in some way.
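One detail in that loop is worth checking: the 20/10/70 split only works if the random number is drawn uniformly from 0 through 9. A hypothetical choose_action function makes the mapping explicit. With a draw from 1 through 10 instead, 0 would never occur, so adding would happen 10% of the time and modifying 80%.

```rust
// Hypothetical enum naming the three things a worker can do.
#[derive(Debug, PartialEq, Clone, Copy)]
pub enum Action {
    Add,
    Remove,
    Modify,
}

// Maps a number drawn uniformly from 0..=9 onto the 20/10/70 split.
pub fn choose_action(n: u32) -> Action {
    match n {
        0 | 1 => Action::Add,
        2 => Action::Remove,
        _ => Action::Modify,
    }
}
```

Enumerating all ten inputs yields two Adds, one Remove, and seven Modifies.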

Let’s go back and fill in ViewModelHandle. We know our handle will need to hold on to the sending side of one channel for each background thread. It turns out that’s the only thing it needs to hold on to: we can create the shared view model and give it to all the background threads, after which the handle itself never needs it.

```rust
// ViewModelHandle holds a vector of senders corresponding to each ThreadWorker's receiver.
pub struct ViewModelHandle(Vec<mpsc::Sender<()>>);

impl ViewModelHandle {
    pub fn new<Observer>(num_threads: usize, observer: Observer) -> (ViewModelHandle, ViewModel)
        where Observer: ViewModelObserver
    {
        // Create a new, empty view model.
        let starting_vm = ViewModel::new();

        // Wrap our view model and observer into a mutex and put that inside of an Arc.
        let inner = Arc::new(Mutex::new(Inner::new(starting_vm.clone(), observer)));

        // Create a vector to hold all the senders.
        let mut worker_channels = Vec::with_capacity(num_threads);

        for i in 0..num_threads {
            // Create a new channel pair and stash away the sending side.
            let (tx, rx) = mpsc::channel();
            worker_channels.push(tx);

            // Create a new thread worker, giving it a reference to the mutex-protected
            // Inner we created up above.
            let worker = ThreadWorker::new(i, inner.clone(), rx);

            // Spawn a new thread which runs "main" on the worker.
            thread::spawn(move || worker.main());
        }

        // Send back the handle and the initial view model state.
        (ViewModelHandle(worker_channels), starting_vm)
    }
}
```
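Putting the pieces together, here is a condensed, self-contained sketch of the same design. It makes several simplifying assumptions: a hypothetical Observer trait with only an inserted_item callback, a plain Vec<String> instead of ViewModel, no random sleeps or removals, and a `new` that returns the JoinHandles so the threads can be joined (the version above detaches them). Dropping the Handle drops every Sender, and each worker notices on its next try_recv.

```rust
use std::sync::mpsc;
use std::sync::{Arc, Mutex};
use std::thread;
use std::time::Duration;

// Hypothetical, simplified observer: receives a snapshot and the new index.
pub trait Observer: Send + 'static {
    fn inserted_item(&self, values: Vec<String>, index: usize);
}

struct Inner<O: Observer> {
    values: Vec<String>,
    observer: O,
}

// Like the post's ViewModelHandle, this only owns the Senders.
pub struct Handle(Vec<mpsc::Sender<()>>);

impl Handle {
    pub fn new<O: Observer>(num_threads: usize, observer: O) -> (Handle, Vec<thread::JoinHandle<()>>) {
        let inner = Arc::new(Mutex::new(Inner { values: Vec::new(), observer }));
        let mut senders = Vec::with_capacity(num_threads);
        let mut joins = Vec::with_capacity(num_threads);
        for id in 0..num_threads {
            let (tx, rx) = mpsc::channel::<()>();
            senders.push(tx);
            let inner = Arc::clone(&inner);
            joins.push(thread::spawn(move || loop {
                {
                    // Modify and notify while holding the lock, as the post does.
                    let mut guard = inner.lock().unwrap();
                    guard.values.push(format!("rust-thread-{}", id));
                    let snapshot = guard.values.clone();
                    guard.observer.inserted_item(snapshot, guard.values.len() - 1);
                } // lock released here
                thread::sleep(Duration::from_millis(5));
                // Stop once the Handle (and so every Sender) has been dropped.
                if let Err(mpsc::TryRecvError::Disconnected) = rx.try_recv() {
                    return;
                }
            }));
        }
        (Handle(senders), joins)
    }
}
```

Each notification is sent while the mutex is held, so an observer that records indices sees them in strictly increasing order (0, 1, 2, and so on), however the threads interleave.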

For the sake of the length of this post, I’m going to skip most of the C interface and the Swift code that wraps it, as they are very similar to what we discussed in Part 3. One new feature is that we can now use rusty-cheddar to automatically generate the C header from our Rust code (including copying doc comments!). That’s fantastic both because it reduces manual work and because it reduces the chance of errors caused by the header and the code getting out of sync.

The one bit of new interface technique I want to cover deals with the view_model_observer struct that Swift fills in and gives to Rust. This is the C interface:

```c
struct view_model_observer {
    void* user;
    void (*destroy_user)(void* user);
    void (*inserted_item)(void* user, view_model* view_model, size_t index);
    void (*removed_item)(void* user, view_model* view_model, size_t index);
    void (*modified_item)(void* user, view_model* view_model, size_t index);
};
```
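On the Rust side, a struct like this can be mirrored with `#[repr(C)]`. The sketch below is an assumption about how that mirroring might look, not the sample project’s actual code. The placeholder ViewModel, the `inserted` helper, and the convention of handing each snapshot over as a `Box::into_raw` pointer that the callee must free are all hypothetical. Raw pointers are not Send, so the `unsafe impl Send` is a promise we make on Swift’s behalf that the compiler cannot check. Implementing Drop to call destroy_user gives Swift a chance to release its user object when Rust is finished with the observer.

```rust
use std::os::raw::c_void;

// Placeholder for the Rust ViewModel handed across the C boundary (hypothetical).
pub struct ViewModel(pub Vec<String>);

// Rust mirror of the C `view_model_observer` struct. #[repr(C)] gives the
// fields the same order and layout as the C declaration.
#[allow(non_camel_case_types)]
#[repr(C)]
pub struct view_model_observer {
    pub user: *mut c_void,
    pub destroy_user: extern "C" fn(user: *mut c_void),
    pub inserted_item: extern "C" fn(user: *mut c_void, view_model: *mut ViewModel, index: usize),
    pub removed_item: extern "C" fn(user: *mut c_void, view_model: *mut ViewModel, index: usize),
    pub modified_item: extern "C" fn(user: *mut c_void, view_model: *mut ViewModel, index: usize),
}

// Raw pointers are not Send. This unsafe impl asserts that the Swift callbacks
// may be invoked from Rust background threads; the compiler cannot verify it.
unsafe impl Send for view_model_observer {}

impl view_model_observer {
    // Moves the snapshot to the heap and passes ownership to the callee,
    // which must free it (hypothetical ownership convention).
    pub fn inserted(&self, view_model: ViewModel, index: usize) {
        let raw = Box::into_raw(Box::new(view_model));
        (self.inserted_item)(self.user, raw, index);
    }
}

// When Rust drops the observer (after the last worker thread exits),
// let Swift release its `user` object.
impl Drop for view_model_observer {
    fn drop(&mut self) {
        (self.destroy_user)(self.user);
    }
}
```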

user and destroy_user are straight out of Part 3: we’re going to give Rust some Swift object and a way to deallocate it when it’s finished. What do we pass here, though? We have a ViewModelHandle class in Swift that wraps up our Rust view model handle. Remember that to pass a Swift object as a raw C pointer, we have to convert it via Unmanaged<T>, which has passRetained and passUnretained methods for choosing whether to hand over a +1 reference count. But both of these are problematic:

- If we use passRetained to give the Rust ViewModelHandle a strong reference to our Swift ViewModelHandle, that creates a strong reference cycle: the Swift ViewModelHandle keeps the Rust side alive, and the Rust side keeps the Swift ViewModelHandle alive, so neither is ever deallocated.
- If we use passUnretained to give the Rust ViewModelHandle an unowned reference to our Swift ViewModelHandle, we risk Rust calling back into a Swift object that has already been deallocated.

The typical solution to this in Swift apps would be to pass a weak reference, but we can’t do that directly across a C interface. Instead, we can create a Swift wrapper object that holds a weak reference to our Swift ViewModelHandle and give Rust a strong reference to that wrapper object:

```swift
final class WeakHolder<T: AnyObject> {
    weak var object: T?

    init(_ object: T) {
        self.object = object
    }
}

final class ViewModelHandle {
    // The last-known state of the view model. Should only be read or written from the
    // iOS main thread (used to power a table view).
    var viewModel: ViewModel!

    init(numberOfWorkerThreads: Int) {
        // Create a pointer with a +1 reference count to a WeakHolder<ViewModelHandle>
        let weakSelf = UnsafeMutablePointer<Void>(Unmanaged.passRetained(WeakHolder(self)).toOpaque())

        // Pass it into the Rust layer
        let observer = view_model_observer(user: weakSelf,
                                           destroy_user: freeViewModelHandle,
                                           inserted_item: handleInsertedItem,
                                           removed_item: handleRemovedItem,
                                           modified_item: handleModifiedItem)
        ...
    }
}
```
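Rust’s standard library offers the same pattern directly. As a rough analog only (the Swift code above uses `weak var`, not Rust types), this sketch gives a hypothetical Rust WeakHolder a `std::sync::Weak`. Owning the holder strongly does not keep the target alive, and `upgrade` returns None once the last strong reference is gone, much as a Swift weak var becomes nil once its object is deallocated.

```rust
use std::sync::{Arc, Weak};

// Hypothetical Rust analog of the Swift WeakHolder: strongly owned itself,
// but holding only a weak reference to the real object.
pub struct WeakHolder<T> {
    object: Weak<T>,
}

impl<T> WeakHolder<T> {
    pub fn new(object: &Arc<T>) -> WeakHolder<T> {
        WeakHolder { object: Arc::downgrade(object) }
    }

    // Comparable to Swift's `guard let handle = holder.object`: succeeds only
    // while some strong reference to the object still exists.
    pub fn object(&self) -> Option<Arc<T>> {
        self.object.upgrade()
    }
}
```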

We’ll also write some Swift glue code that translates the C callbacks we get into calls on a proper Swift protocol, dispatched back onto the iOS main queue:

```swift
protocol ViewModelHandleObserver: class {
    func viewModelHandle(handle: ViewModelHandle, insertedItemAtIndex index: Int)
    func viewModelHandle(handle: ViewModelHandle, removedItemAtIndex index: Int)
    func viewModelHandle(handle: ViewModelHandle, modifiedItemAtIndex index: Int)
}

private func handleInsertedItem(ptr: UnsafeMutablePointer<Void>, viewModel: COpaquePointer, index: Int) {
    autoreleasepool {
        // Convert the pointer we get back into a WeakHolder<ViewModelHandle>
        let handle = Unmanaged<WeakHolder<ViewModelHandle>>.fromOpaque(COpaquePointer(ptr)).takeUnretainedValue()

        // Jump onto the main thread
        dispatch_async(dispatch_get_main_queue()) {
            // "Strongify" the reference to our ViewModelHandle (or return immediately if it's gone)
            guard let handle = handle.object else { return }

            // Update our viewModel property to the latest version we've been given
            handle.viewModel = ViewModel(viewModel)

            // Notify our observer about what changed
            handle.observer?.viewModelHandle(handle, insertedItemAtIndex: index)
        }
    }
}

// handleRemovedItem and handleModifiedItem are nearly identical
```

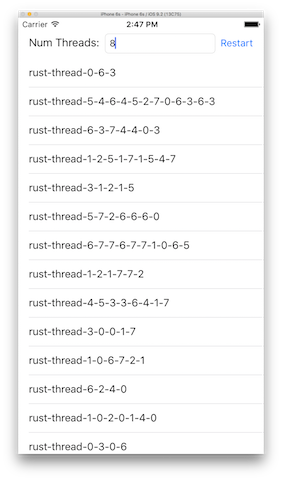

We’ll let the user decide how many Rust threads should be created, and give them a way to

restart the entire process with a different number (nicely giving us a way to

verify that all of our cleanup code runs successfully). Each thread can insert

rows in the table view with the contents rust-thread-$THREAD_ID, and when

they modify a row they append -$THREAD_ID to whichever row they randomly

selected. After running for a few minutes with eight threads, this is what I get

(you’ll see different results):

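There is no question that it could be very expensive to make a full copy of the entire view model state every time it’s changed. In the next post, we’ll explore a completely different strategy to avoid that, but first let’s discuss some intricacies of this one. A few natural questions would be:

1. Could we avoid copying the whole view model every time it changes?
2. Why do we keep the mutex locked while we call the observer?
3. Why do we send Swift copies of the view model instead of letting it ask Rust for the current state?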

The answer to the first question is “yes.” We could avoid copying the whole view model every time, and there are lots of different ways we could accomplish that. For example, we could send Swift the single value that changed each time and let it update its own copy. That would definitely be more performant, but it would cost some complexity: we’d need to maintain code to update the view model in two different languages. This would also get considerably trickier as our view model became more than just a list of strings.

The answers to questions two and three are closely related. The mutex around our Inner value protects us from data races. However, calling the observer without the mutex locked, or not giving Swift copies of the data, would each introduce a different kind of race condition that leads to everyone’s favorite iOS exception (courtesy of UITableView): “The number of rows contained in an existing section after the update (…) must be equal to the number of rows contained in that section before the update (…), plus or minus the number of rows inserted or deleted from that section (… inserted, … deleted) and plus or minus the number of rows moved into or out of that section (… moved in, … moved out).”

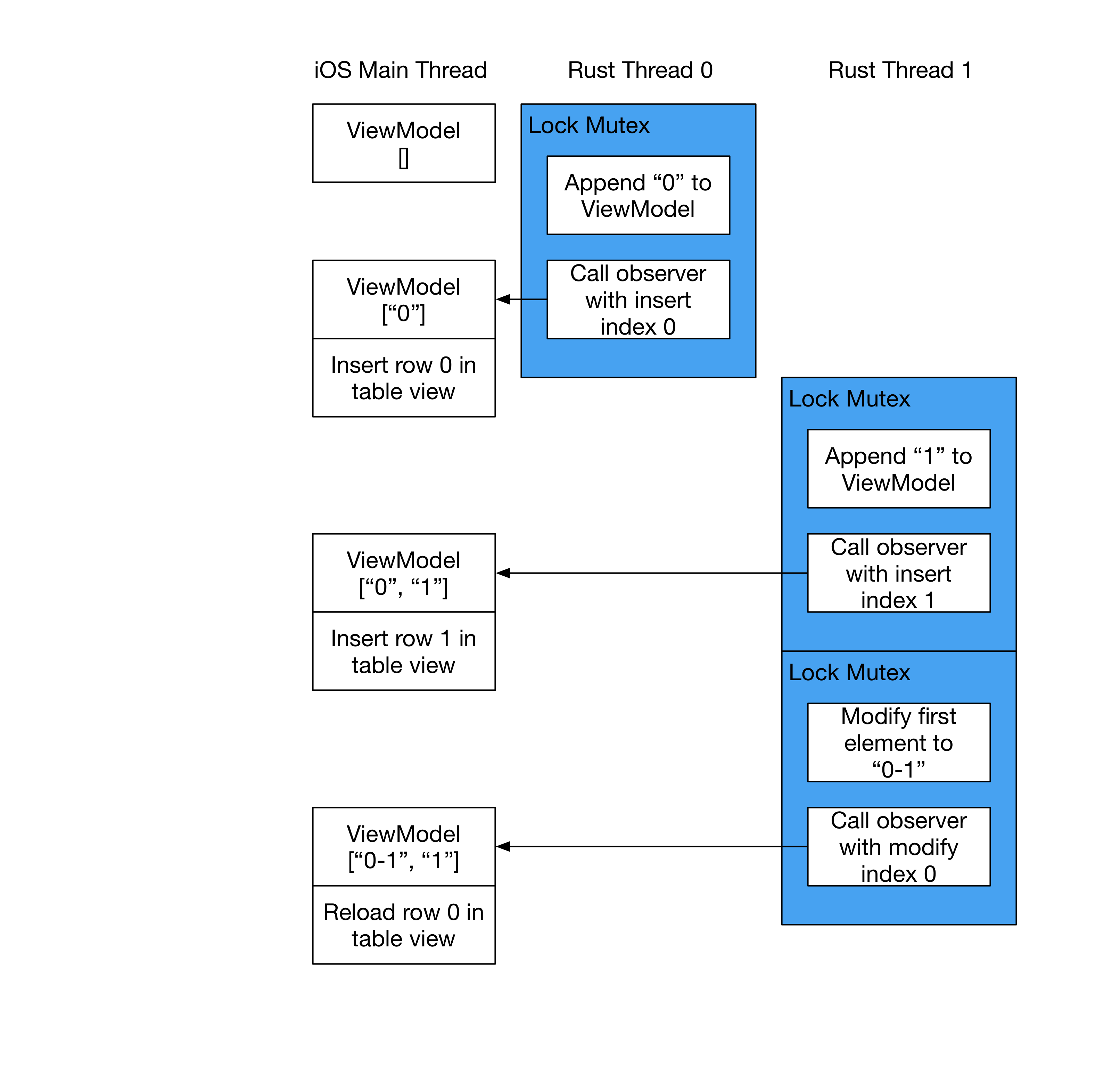

Consider what happens the way we’ve written the app. The following diagram

shows the flow of view model updates with two background Rust threads, with

time moving from top to bottom:

Whenever a background thread obtains the lock, it modifies the view model and

sends the updated state to the iOS main thread (via the observer callbacks).

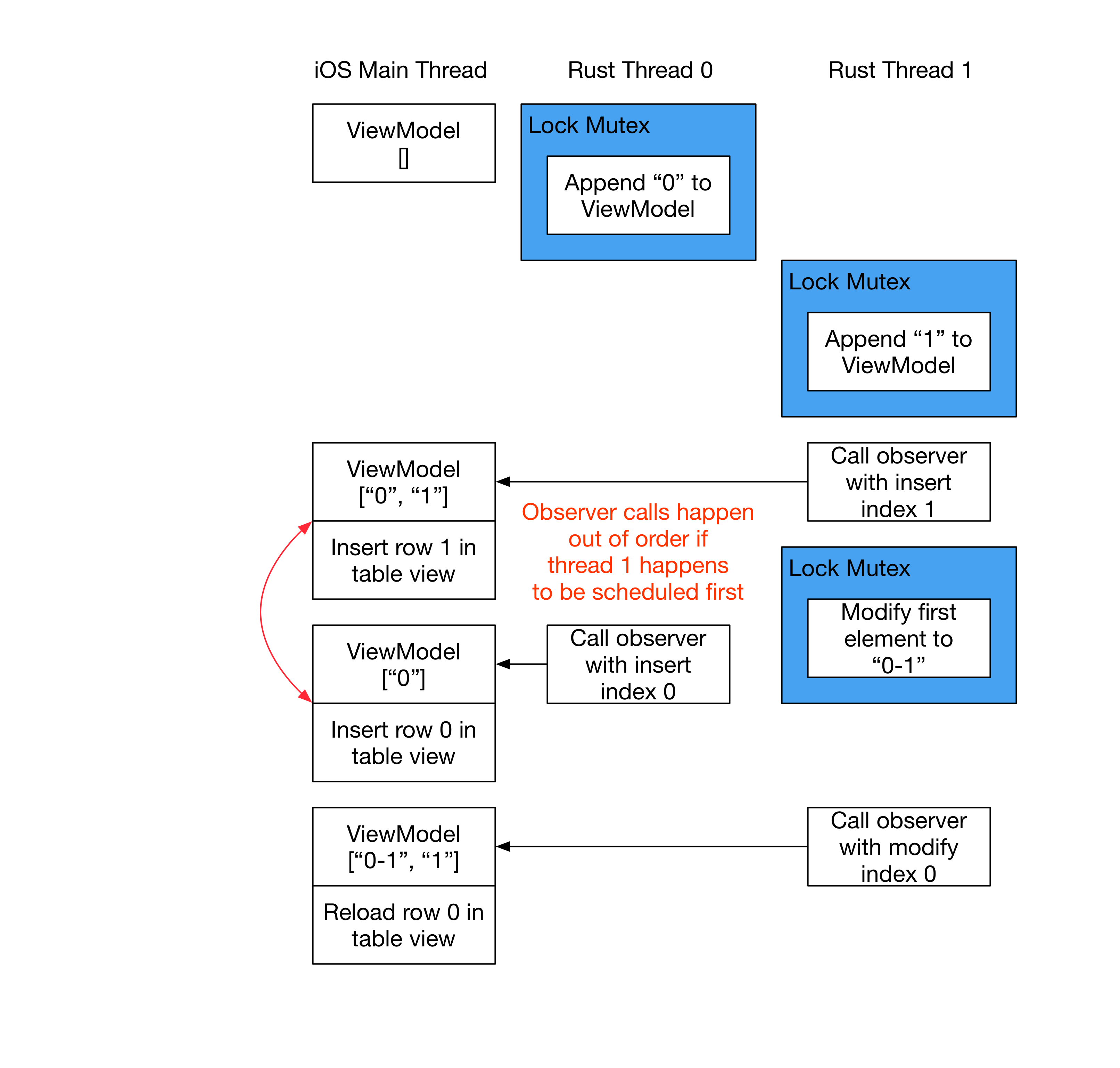

Consider what could happen if we unlocked the mutex after modifying the view

model but before calling the observer:

If we unlock the mutex immediately after thread 0 modifies the view model, the OS could schedule thread 1 to run right away. Thread 1 could then lock the mutex, modify the view model, and call the observer before the OS finally goes back to executing thread 0. If that happens, the iOS main thread will get out-of-order view model updates, leading to the table view exception we all hate.
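The ordering argument can be demonstrated with a small sketch. The function notify_under_lock is hypothetical, and a channel send stands in for the observer callback. Each worker appends a row and reports the new row count while still holding the lock, so the reported counts arrive as exactly 1, 2, 3, and so on, in order. Moving the send after the guard is dropped would allow counts to arrive out of order, which is precisely the sequence that trips up UITableView.

```rust
use std::sync::mpsc;
use std::sync::{Arc, Mutex};
use std::thread;

// Each of `num_threads` workers appends `per_thread` rows and reports the new
// row count while the mutex is still held. Returns the counts in arrival order.
pub fn notify_under_lock(num_threads: usize, per_thread: usize) -> Vec<usize> {
    let shared = Arc::new(Mutex::new(Vec::<String>::new()));
    let (tx, rx) = mpsc::channel();
    let mut handles = Vec::new();
    for id in 0..num_threads {
        let shared = Arc::clone(&shared);
        let tx = tx.clone();
        handles.push(thread::spawn(move || {
            for _ in 0..per_thread {
                let mut rows = shared.lock().unwrap();
                rows.push(format!("rust-thread-{}", id));
                // Still locked: no other thread can modify `rows` before this "notification".
                tx.send(rows.len()).unwrap();
            } // the guard is dropped at the end of each iteration
        }));
    }
    // Drop our own Sender so the receiving iterator ends once all workers finish.
    drop(tx);
    for handle in handles {
        handle.join().unwrap();
    }
    rx.iter().collect()
}
```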

An almost identical issue occurs if we don’t send updated view models as part of the observer messages but instead provide a way for iOS to ask Rust to lock the mutex and inspect the current value of the view model. We could get an “inserted row 0” callback from thread 0, then thread 1 could lock and update the view model (inserting another row), then finally the main thread would run again. It would expect that row 0 was just inserted, but it would see a view model with two rows in it. Again, we’d get the table view exception.

It is generally unwise for a library to call back into other code while holding a mutex locked, as that opens the door for deadlock if the “other code” (Swift, in our case) calls back into the library again in some way that attempts to lock the same mutex. We don’t have that problem here, though, because we don’t expose any functions to Swift that try to lock the mutex: only the background worker threads have access to it.
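The deadlock hazard is easy to reproduce with a hypothetical Library type that runs a callback while holding its lock. Calling `lock()` again from inside the callback would never return (the standard library leaves that case unspecified and says it may deadlock or panic). This sketch therefore uses `try_lock` to report the re-entrant attempt instead of hanging.

```rust
use std::sync::{Mutex, TryLockError};

// Hypothetical library type that calls back into user code with its lock held.
pub struct Library {
    state: Mutex<Vec<String>>,
}

impl Library {
    pub fn new() -> Library {
        Library { state: Mutex::new(Vec::new()) }
    }

    // Like the post's workers, this runs the callback while still holding the lock.
    pub fn append_and_notify<F: Fn(&Library)>(&self, value: &str, callback: F) {
        let mut state = self.state.lock().unwrap();
        state.push(value.to_string());
        callback(self);
    } // `state` is dropped (and the mutex unlocked) only here

    // A re-entrant entry point. Using lock() here from inside the callback would
    // never return, so try_lock reports the problem instead.
    pub fn try_len(&self) -> Result<usize, &'static str> {
        match self.state.try_lock() {
            Ok(state) => Ok(state.len()),
            Err(TryLockError::WouldBlock) => Err("would deadlock: lock already held"),
            Err(TryLockError::Poisoned(_)) => Err("poisoned"),
        }
    }
}
```

Outside the callback the same call succeeds, which is why the post avoids exposing any lock-taking functions to Swift.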

We finally have a working (albeit silly) iOS app that’s driven by Rust! In the next post, we’ll recreate this same application using a different technique that doesn’t need to make a full copy of the view model when it changes (and actually, doesn’t even need a mutex!). Stay tuned.

Thanks to Steve Klabnik for reviewing a draft of this post.
