

TensorFlow kicked off their 3rd annual summit with a lot of new developments and releases. We have new updates on almost every aspect of TensorFlow. If you are new to TensorFlow, it is an open source collection of libraries and tools from Google for machine learning tasks. Much like Create ML and Core ML on iOS, as discussed in a prior blog post, you can create and deploy models to the server, device, and even the browser. Unlike Create ML, TensorFlow is a lower-level tool that requires knowledge of processing data and building neural networks to get working. Outside of Google, companies like Airbnb, PayPal, and Twitter are all using TensorFlow in their production environments.

TensorFlow boasts many improvements and increased speeds. They simplified the APIs by removing deprecated code.

Speed improvements we are seeing

| Speed Increase | Operation Type | Device |
|---|---|---|
| 1.8x | training | Tesla V100 GPU |
| 1.6x | training | Google TPU v2 |
| 3.3x | inference | Intel Skylake CPU |

TensorFlow is also shifting its high-level API to Keras instead of its built-in high-level API. As mentioned in my prior blog post, Keras is a fantastic choice for a front end, as you can swap out to platforms like PlaidML to train on AMD cards or use TensorFlow when training on an NVIDIA card. Scalability, however, is one area where Keras suffers. In TensorFlow 2.0, Keras has been optimized for TensorFlow to provide more power and scalability to ‘> 1 exaflops’. One important thing to note: to get this power, you need to pull in Keras from tf.keras instead of the standalone Keras package.
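
As a minimal sketch of what pulling Keras from tf.keras looks like, the snippet below builds a tiny model without importing the standalone `keras` package. The layer sizes and the four-feature dummy input are made up for illustration and do not come from the summit.

```python
import tensorflow as tf

# Build the model through tf.keras rather than the standalone `keras` package.
# Layer sizes here are arbitrary and purely illustrative.
model = tf.keras.Sequential([
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(3, activation="softmax"),
])

# Calling the model on a dummy batch (2 samples, 4 features) builds its weights.
dummy_batch = tf.zeros((2, 4))
predictions = model(dummy_batch)

print(predictions.shape)     # one row of 3 class scores per sample
print(model.count_params())  # total number of trainable weights and biases
```

The only change from a standalone Keras script is where the classes are imported from; the model definition itself reads the same.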

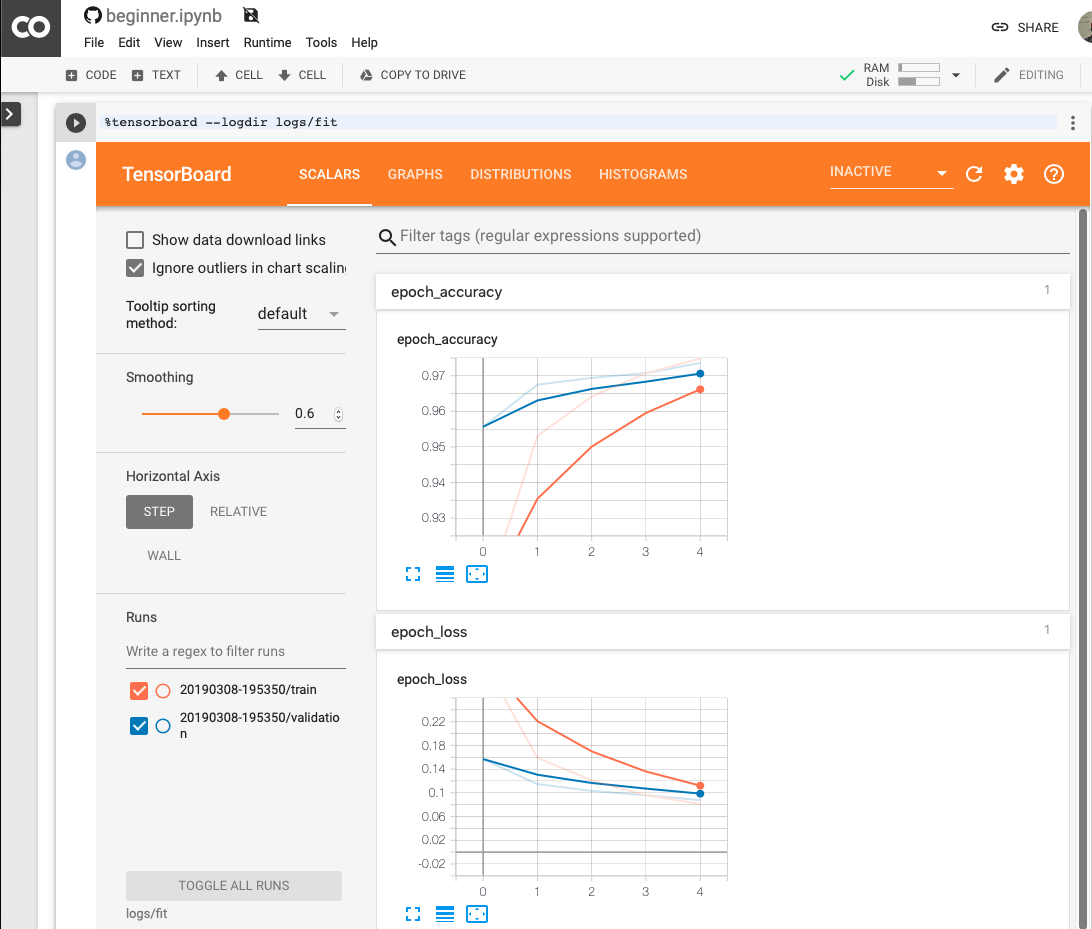

Some other features are worth mentioning: TensorBoard is now viewable directly in Jupyter Notebooks or Colab, and the built-in data sets continue to grow.

With TensorFlow 2.0, all code runs in eager execution, meaning every Python command gets executed immediately. This change improves debugging and makes it easy to convert models into graphs for deployment.
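
To make the idea concrete, here is a small sketch of eager execution, assuming a TensorFlow 2.x install. The matrix values and the function name `matmul_squared` are invented for illustration. It also shows `tf.function`, which is one way TensorFlow 2.x traces Python code into a graph.

```python
import tensorflow as tf

# In eager mode, operations run as soon as they are called,
# so results can be inspected like ordinary Python values.
x = tf.constant([[1.0, 2.0], [3.0, 4.0]])
y = tf.matmul(x, x)

print(tf.executing_eagerly())  # reports whether eager mode is active
print(y.numpy())               # the concrete result, no session required


# Decorating a Python function with tf.function asks TensorFlow to trace it
# into a graph the first time it is called. `matmul_squared` is a
# hypothetical name used only for this example.
@tf.function
def matmul_squared(t):
    return tf.matmul(t, t)


z = matmul_squared(x)
print(z.numpy())  # same values as y, computed through the traced graph
```

Because the eager and traced versions produce the same values, you can debug in eager mode and only add the decorator once the logic is working.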

The team has also improved error handling, which now gives you the file and line of the error so your larger projects won’t get stuck in debugging. Currently, when you get an error, you get the function in question, but the actual line and filename are not provided.

As of now, TensorFlow 2.0 is available in an Alpha release. They are expecting the RC to ship in spring, with the full release set for Q2 of 2019. Thanks to Google’s hard work, there are converter scripts available. Google has said it is using these internally on its projects and adding to the scripts as problems come up. The converter scripts make use of the new 1.x compatibility module. This module holds some of the deprecated functions so that large applications can continue to function. The team at TensorFlow notes that the converter does not update the styling to be idiomatic with 2.0, but it keeps the code running in the new version.

For those new to TFX, this is TensorFlow’s end-to-end solution that covers you from the start of your data pipeline, all the way to serving your models and logging results. TFX is what makes TensorFlow stand out from other machine learning libraries, like PlaidML or Caffe.

Until now, TFX was just a collection of libraries you would need to stitch together into a full solution. However, with TensorFlow 2.0, the team has open sourced their large “horizontal” layers to make a complete solution available.

These new layers include:

While all of this is exciting, probably the most exciting is the pipeline storage, called Metadata store. Metadata store allows your cluster to have context between runs. Over time, that gives you great insight into runs and experiments. You can also carry over state and reuse previously computed outputs to save time when retraining or making small changes.

The new Edge TPU brings fast inferencing to any device. Coral is making several products, including a full dev board that I like to call the Raspberry Pi of ML. They have also made a USB accelerator so you can easily speed up your existing boards with this new processor.

Also in the works is a small PCI-E Accelerator and System-on-Module pluggable board.

TensorFlow Lite is a stripped-down runtime that gives you on-device machine learning for Android, iOS, Raspberry Pis, and now the new Edge TPU.

The main thing to note with the new version of TF Lite is improved inference speeds.

| Device | Speed | Improvement |
|---|---|---|
| CPU | 124ms | Baseline |
| CPU w/ Quantization | 64ms | 1.9x |
| GPU | 16ms | 7.7x (OpenGL and Metal) |
| Edge TPU | 2ms | 62x |
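
The improvement column is the CPU baseline latency divided by each device's latency. As a quick check, the sketch below recomputes it from the latencies in the table; the helper name `speedups` is just for illustration.

```python
# Latencies in milliseconds, copied from the table above.
latencies_ms = {
    "CPU": 124,
    "CPU w/ Quantization": 64,
    "GPU": 16,
    "Edge TPU": 2,
}


def speedups(latencies, baseline_key="CPU"):
    """Return the baseline latency divided by each device's latency."""
    baseline = latencies[baseline_key]
    return {device: baseline / ms for device, ms in latencies.items()}


for device, factor in speedups(latencies_ms).items():
    print(f"{device}: {factor:.2f}x")
```

The GPU ratio works out to 124 / 16 = 7.75, so the 7.7x in the table appears to be truncated rather than rounded.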

The JavaScript version of TensorFlow has officially hit 1.0. This new launch includes off-the-shelf models for image, text, and audio.

You can now use TensorFlow.js to deploy your models to the browser, Node.js, Electron Desktop Apps, and even Mobile Native apps.

They are boasting a 9x inference speed up from last year.

One of the TensorFlow projects I’m most excited about has now hit v0.2. With this release, they say Swift for TensorFlow is now ready for users to experiment with and try out, although there are still some bugs so you might want to hold off on any production releases until we get a bit further in.

fast.ai is writing a new course on Swift for TensorFlow that should be out soon to help bring you up to speed quickly on how you can be writing your ML code using Swift.

TensorFlow has done a great job building its community, and there is a lot to report on everything they have going on.

With TensorFlow ever growing, it can be hard to know where to jump in and contribute. They have formed new special interest groups for the community so you can take part in the area of TensorFlow you care about the most. These include:

To help get more people into machine learning and TensorFlow there are now two new courses to take:

With TensorFlow v2.0, the team paired up with Devpost to create a hackathon with $150k in prizes. Check out their page for all the details and how to submit for a chance to win.

TensorFlow is partnering with O’Reilly Media to create a week-long conference that is community focused where people all over the TensorFlow ecosystem can come together, show off, and learn from each other.

This year the conference is scheduled to take place Oct 28-31 in Santa Clara, CA. A call for papers is currently open until April 23, with a focus on real-world experiences and innovative ideas. Find more info at tensorflow.world or on Twitter @TensorFlowWorld.

There is still so much to talk about with all these new products and versions. I highly recommend checking out TensorFlow’s YouTube channel for all of their session talks, as well as the newly designed TensorFlow site for all the goodies to be found in TensorFlow v2.0. You can get started with it today by running the following command.

pip install tensorflow==2.0.0-alpha0
