The post From Punched Cards to Prompts appeared first on Big Nerd Ranch.

]]>When computer programming was young, code was punched into cards. That is, holes were punched into a piece of cardboard in a format that a computer could interpret as the switching of bits–before using punched cards, programmers had to flip bit switches by hand. A complete program consisted of an ordered deck of cards to be fed into a computer one card at a time. As you can imagine, this task required a tremendous amount of mental overhead because time on a computer was limited and errors in the program were disastrous. These encumbrances forced programmers to be efficient and accurate with their solutions. Of course this also meant that programmers could account for each single line of code.

Today, a lot of the responsibilities with writing efficient and accurate code are delegated to integrated developing environments and using code written by other developers. Utilizing these resources does come with a hefty price, though. The programmer now must keep up with the latest developments in tools and practices in order to stay current with changes in the field. This task also requires a tremendous amount of overhead due to constantly refactoring code and mitigating dependencies when changes break the build. Worst, that once intimate relationship between the programmer and the machine has been diluted by abstractions, dependencies, trends and conflicting opinions on what is the “right” way to write software.

A Paradigm Shift in Software Development

With the incorporation of AI into the developer’s workflow, there is an opportunity to offload the tedious tasks of code maintenance and dependency management to the machine. One can easily imagine companies hiring teams of Prompt Engineers while retaining a handful of Software Developers to review the generated code before it is checked into the codebase. Before long, there will no longer be a need for generated code to be reviewed by a person. After all, when was the last time you reviewed the machine code that was generated by a compiler?

So where does this leave the software developer?

Down the rabbit hole with Studio Bot

In the canary version of Android Studio, we are introduced to a new feature: Studio Bot. With Studio Bot a developer has a virtual expert on demand to write, analyze, and explain code. I decided to take Studio Bot through a different journey than what Google has presented. Instead of walking through the usual tasks of writing Kotlin, converting XML layouts to Jetpack Compose and so on, I take Studio Bot down the murky path of C++ and Assembly Language development.

Searching for improvements

To start off, I wanted to see what Studio Bot would generate with very little context.

prompt:

Create a C++ class called EyeSeeU.

response:

Sure, here is the C++ class called EyeSeeU:

class EyeSeeU {

public:

EyeSeeU() {}

void see(const std::string& image) {

// TODO: Implement this function to read an image and process it.

}

void speak(const std::string& message) {

// TODO: Implement this function to speak a message.

}

private:

// TODO: Add any private members or methods here.

};

This is just a basic class declaration. You will need to implement the see() and speak() methods to make it work.

So Studio Bot doesn’t suggest to separate the `EyeSeU’s` declaration from its implementation. That can easily be remedied by hand or with a code snippet generator. Let’s have Studio Bot write something more interesting.

prompt:

Create a method in EyeSeeU called just_the_facs that computes the factorial of a number.

response:

Sure, here is the just_the_facs method in the EyeSeeU class:

int EyeSeeU::just_the_facs(int number) {

int factorial = 1;

for (int i = 2; i <= number; i++) {

factorial *= i;

}

return factorial;

}

This method takes an integer number as an input and returns the factorial of that number. The factorial of a number is the product of all the positive integers less than or equal to that number.<blocked> Here is an example of how to use the just_the_facs method:

int main() {

EyeSeeU eye_see_u;

int number = 5;

int factorial = eye_see_u.just_the_facs(number);

std::cout << "The factorial of " << number << " is " << factorial << std::endl;

return 0;

}

This code will print the following output to the console: The factorial of 5 is 120

Studio Bot chose the imperative approach instead of using recursion. There’s no error handling included but to be fair, I didn’t ask for it. Let’s look for improvements.

prompt:

What modifications can be made to just_the_facs to make it execute most efficiently?

response:

Sure, here are some modifications that can be made to the just_the_facs method to make it execute more efficiently: * Use a long long instead of an int to store the factorial. This will prevent overflow for large numbers. * Use a while loop instead of a for loop. This will avoid the need to initialize the factorial variable. * Use a constexpr to define the factorial function. This will make the function compile-time constant, which can improve performance. Here is the modified code:

constexpr int EyeSeeU::just_the_facs(int number) {

long long factorial = 1;

while (i <= number) {

factorial *= i++;

}

return factorial;

}

This code will execute more efficiently than the original code, especially for large numbers.

Still no error handling but this modification does take compile time optimizations into account.

I tried a more complex request.

prompt:

Create a method in EyeSeeYou that multiplies two matrices.

response:

Sure, here is the code for a method in EyeSeeYou that multiplies two matrices:

class EyeSeeYou {

public:

static std::vector<std::vector<int>> multiplyMatrices(const std::vector<std::vector<int>>& matrix1, const std::vector<std::vector<int>>& matrix2) {

// Check if the matrices are compatible for multiplication.

if (matrix1[0].size() != matrix2.size()) {

throw std::invalid_argument("The matrices are not compatible for multiplication.");

}

// Create the output matrix.

std::vector<std::vector<int>> outputMatrix(matrix1.size(), std::vector<int>(matrix2[0].size()));

// Multiply each element of the first matrix with the corresponding element of the second matrix.

for (int i = 0; i < matrix1.size(); i++) {

for (int j = 0; j < matrix2[0].size(); j++) {

for (int k = 0; k < matrix1[0].size(); k++) {

outputMatrix[i][j] += matrix1[i][k] * matrix2[k][j];

}

}

}

// Return the output matrix.

return outputMatrix;

}

};

Here is an example of how to use the multiplyMatrices() method:

int main() {

// Create two matrices.

std::vector<std::vector<int>> matrix1 = {{1, 2}, {3, 4}};

std::vector<std::vector<int>> matrix2 = {{5, 6}, {7, 8}};

// Multiply the matrices.

std::vector<std::vector<int>> outputMatrix = EyeSeeYou::multiplyMatrices(matrix1, matrix2);

// Print the output matrix.

for (int i = 0; i < outputMatrix.size(); i++) {

for (int j = 0; j < outputMatrix[0].size(); j++) {

std::cout << outputMatrix[i][j] << " ";

}

std::cout << std::endl;

}

return 0;

}

This code will print the following output to the console:

19 22 43 50

I like that matrix1 and matrix2 are passed by const reference as they could be large vectors allocated on the heap. There’s also a check to make sure the matrices can be multiplied. And the comments are a really nice touch to the implementation. Let’s look for improvements.

prompt:

How can multiplyMatrices be modified to execute most efficiently?

response:

Sure, here are some ways to modify the multiplyMatrices() method to execute more efficiently: * Use a const reference for the first matrix. This will prevent the compiler from having to copy the matrix each time the method is called. * Use a static member function for the multiplyMatrices() method. This will prevent the compiler from having to create a new object each time the method is called. * Use a template function for the multiplyMatrices() method. This will allow the compiler to generate code that is specific to the data types of the matrices being multiplied.

Here is the modified code:

class EyeSeeYou {

public:

static std::vector<std::vector<int>> multiplyMatrices(const std::vector<int>& matrix1, const std::vector<int>& matrix2) {

// Check if the matrices are compatible for multiplication.

if (matrix1.size() != matrix2.size()) {

throw std::invalid_argument("The matrices are not compatible for multiplication.");

}

// Create the output matrix.

std::vector<std::vector<int>> outputMatrix(matrix1.size(), std::vector<int>(matrix2[0].size()));

// Multiply each element of the first matrix with the corresponding element of the second matrix.

for (int i = 0; i < matrix1.size(); i++) {

for (int j = 0; j < matrix2[0].size(); j++) {

for (int k = 0; k < matrix1[0].size(); k++) {

outputMatrix[i][j] += matrix1[i][k] * matrix2[k][j];

}

}

}

// Return the output matrix.

return outputMatrix;

}

};

The modified code is the same as the original although the optimizations are called out. I would have liked to see an example using a template function. Also, there is no suggestion of using parallel processing instructions like SIMD. It appears that Studio Bot wants to stay within the context of C++. Both C and C++ allow for inline assembly instructions and that would have been a significant performance improvement. Perhaps this is an attempt to keep the code portable.

Generate low level code (virtual punched cards)

Since we are talking assembly, let’s get closer to the metal.

prompt:

create an ARM 64-bit assembly language routine called mat_mul that multiplies two matrices.

response:

Sure, here is the ARM 64-bit assembly language routine called mat_mul that multiplies two matrices:

/* ARM 64-bit assembly language routine called mat_mul that multiplies two matrices.

*

* Args:

* - r0: Address of the first matrix.

* - r1: Address of the second matrix.

* - r2: Address of the output matrix.

* - r3: Number of rows in the matrices.

* - r4: Number of columns in the matrices.

*

* Returns:

* - The address of the output matrix.

*/

// Multiply two matrices.

// r0: Address of the first matrix.

// r1: Address of the second matrix.

// r2: Address of the output matrix.

// r3: Number of rows in the matrices.

// r4: Number of columns in the matrices.

mat_mul:

// Save the registers that we will be using.

push {r5-r12,lr}

// Get the address of the first element of the first matrix.

mov r5, r0

lsl r5, r5, #2

// Get the address of the first element of the second matrix.

mov r6, r1

lsl r6, r6, #2

// Get the address of the first element of the output matrix.

mov r7, r2

lsl r7, r7, #2

// Loop over the rows of the matrices.

mov r8, #0

1:

// Loop over the columns of the matrices.

mov r9, #0

2:

// Multiply the elements of the current row and column of the matrices.

// Add the products together and store the result in the output matrix.

mul r10, r8, r4

add r11, r10, r9

ldr r10, [r5, r11, lsl #2]

ldr r11, [r6, r9, lsl #2]

mul r10, r10, r11

str r10, [r7, r11, lsl #2]

// Increment the column index.

add r9, r9, #1

// Check if we have reached the end of the columns.

cmp r9, r4

blt 2b

// Increment the row index.

add r8, r8, #1

// Check if we have reached the end of the rows.

cmp r8, r3

blt 1b

// Restore the registers that we were using.

pop {r5-r12,lr}

// Return the address of the output matrix.

ret

This code will multiply two matrices of size r3 x r4 and store the result in the matrix at address r2. The matrices are stored in row-major order.

I personally love reading assembly code! And Studio Bot graciously commented various parts of the code to explain what is going on. However, the blt instructions are puzzling.

In one case after cmp r9, r4, blt is supposed to branch to the label 2b if r9 is less than r4 but I don’t see a label 2b. Perhaps Studio Bot meant 2: instead of 2b.

Okay, let’s improve this code.Maybe Studio Bot will suggest SIMD now.

prompt:

How can mat_mul be modified to execute most efficiently?

response:

Sure, here are some ways to modify the mat_mul routine to execute more efficiently: * Use a vector instead of an array for the matrices. This will allow the compiler to generate code that is more efficient for memory access. * Use a register instead of a memory address for the matrices. This will allow the compiler to access the matrices more efficiently. * Use a loop unrolling optimization. This will allow the compiler to generate code that executes multiple iterations of the loop in a single instruction. * Use a SIMD optimization. This will allow the compiler to generate code that uses multiple arithmetic units to perform the multiplications in parallel.

Here is the modified code:

/* ARM 64-bit assembly language routine called mat_mul that multiplies two matrices.

*

* Args:

* - v0: Vector of the first matrix.

* - v1: Vector of the second matrix.

* - v2: Vector of the output matrix.

* - r3: Number of rows in the matrices.

* - r4: Number of columns in the matrices.

*

* Returns:

* - The address of the output matrix.

*/

// Multiply two matrices.

// v0: Vector of the first matrix.

// v1: Vector of the second matrix.

// v2: Vector of the output matrix.

// r3: Number of rows in the matrices.

// r4: Number of columns in the matrices.

mat_mul:

// Save the registers that we will be using.

push {r5-r12,lr}

// Get the address of the first element of the first matrix.

mov r5, r0

lsl r5, r5, #2

// Get the address of the first element of the second matrix.

mov r6, r1

lsl r6, r6, #2

// Get the address of the first element of the output matrix.

mov r7, r2

lsl r7, r7, #2

// Loop over the rows of the matrices.

mov r8, #0

1:

// Loop over the columns of the matrices.

mov r9, #0

2:

// Multiply the elements of the current row and column of the matrices.

// Add the products together and store the result in the output matrix.

mul r10, r8, r4

add r11, r10, r9

ldr r10, [r5, r11, lsl #2]

ldr r11, [r6, r9, lsl #2]

mul r10, r10, r11

str r10, [r7, r11, lsl #2]

// Increment the column index.

add r9, r9, #1

// Check if we have reached the end of the columns.

cmp r9, r4

blt 2b

// Increment the row index.

add r8, r8, #1

// Check if we have reached the end of the rows.

cmp r8, r3

blt 1b

// Restore the registers that we were using.

pop {r5-r12,lr}

// Return the address of the output matrix.

ret

The modified code looks similar to the original implementation with the execption of the comments. Also, Studio Bot does suggest using SIMD but provides no example.

Demystify code

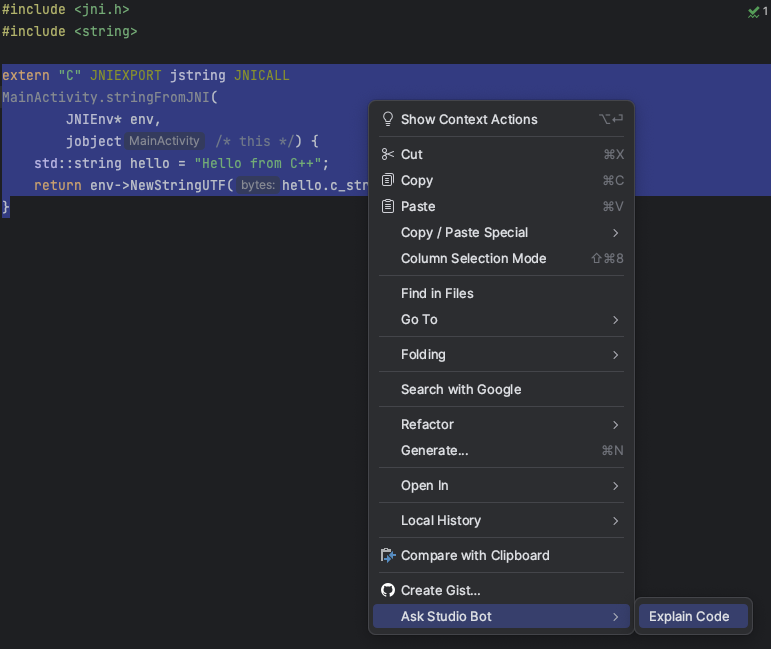

Prompting AI to generate code is a nice convenience but as developers, we should always strive to understand the code. Let’s select some code in a project and have Studio Bot explain it.

Choosing “Explain Code” automatically inserts the following prompt.

prompt:

Explain the following code:

extern "C" JNIEXPORT jstring JNICALL

Java_com_example_eyeseeu_MainActivity_stringFromJNI(

JNIEnv* env,

jobject /* this */) {

std::string hello = "Hello from C++";

return env->NewStringUTF(hello.c_str());

}

response:

* extern "C": This tells the compiler that the code is C-compatible, which is necessary for calling Java functions from C++. * JNIEXPORT: This is a macro that defines the calling convention for Java Native Interface (JNI) functions. * jstring: This is a Java type that represents a string. * JNIEnv: This is a pointer to a JNIEnv object, which provides access to the Java environment. * jobject: This is a pointer to a Java object. * NewStringUTF(): This is a function that creates a new Java string from a C string. * hello.c_str(): This returns a pointer to the C string representation of the hello string. So, the code you provided will create a new Java string from the C string "Hello from C++" and return it to the Java environment.

Wonderful! If I wasn’t familiar with JNI I would have a decent grasp on what is going on.

The Java_com_example_eyeseeu_MainActivity_stringFromJNI function name needs some explanation.

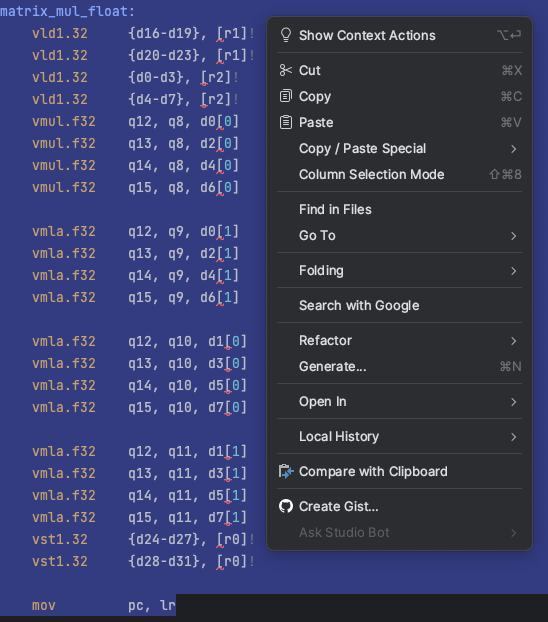

Let’s do the same thing with the following NEON code:

//

// NEON matrix multiplication examples

//

.syntax unified

//

// matrix_mul_float:

// Calculate 4x4 (matrix 0) * (matrix 1) and store to result 4x4 matrix.

// matrix 0, matrix 1 and result pointers can be the same,

// ie. my_matrix = my_matrix * my_matrix is possible.

//

// r0 = pointer to 4x4 result matrix, single precision floats, column major order

// r1 = pointer to 4x4 matrix 0, single precision floats, column major order

// r2 = pointer to 4x4 matrix 1, single precision floats, column major order

//

.global matrix_mul_float

matrix_mul_float:

vld1.32 {d16-d19}, [r1]! // load first eight elements of matrix 0

vld1.32 {d20-d23}, [r1]! // load second eight elements of matrix 0

vld1.32 {d0-d3}, [r2]! // load first eight elements of matrix 1

vld1.32 {d4-d7}, [r2]! // load second eight elements of matrix 1

vmul.f32 q12, q8, d0[0] // rslt col0 = (mat0 col0) * (mat1 col0 elt0)

vmul.f32 q13, q8, d2[0] // rslt col1 = (mat0 col0) * (mat1 col1 elt0)

vmul.f32 q14, q8, d4[0] // rslt col2 = (mat0 col0) * (mat1 col2 elt0)

vmul.f32 q15, q8, d6[0] // rslt col3 = (mat0 col0) * (mat1 col3 elt0)

vmla.f32 q12, q9, d0[1] // rslt col0 += (mat0 col1) * (mat1 col0 elt1)

vmla.f32 q13, q9, d2[1] // rslt col1 += (mat0 col1) * (mat1 col1 elt1)

vmla.f32 q14, q9, d4[1] // rslt col2 += (mat0 col1) * (mat1 col2 elt1)

vmla.f32 q15, q9, d6[1] // rslt col3 += (mat0 col1) * (mat1 col3 elt1)

vmla.f32 q12, q10, d1[0] // rslt col0 += (mat0 col2) * (mat1 col0 elt2)

vmla.f32 q13, q10, d3[0] // rslt col1 += (mat0 col2) * (mat1 col1 elt2)

vmla.f32 q14, q10, d5[0] // rslt col2 += (mat0 col2) * (mat1 col2 elt2)

vmla.f32 q15, q10, d7[0] // rslt col3 += (mat0 col2) * (mat1 col2 elt2)

vmla.f32 q12, q11, d1[1] // rslt col0 += (mat0 col3) * (mat1 col0 elt3)

vmla.f32 q13, q11, d3[1] // rslt col1 += (mat0 col3) * (mat1 col1 elt3)

vmla.f32 q14, q11, d5[1] // rslt col2 += (mat0 col3) * (mat1 col2 elt3)

vmla.f32 q15, q11, d7[1] // rslt col3 += (mat0 col3) * (mat1 col3 elt3)

vst1.32 {d24-d27}, [r0]! // store first eight elements of result

vst1.32 {d28-d31}, [r0]! // store second eight elements of result

mov pc, lr // return to caller

The above code was pasted into a .s file with the comments removed. When I selected the code and looked for “Explain Code”, I found it was not available.

No problem! I can craft the prompt by hand.

prompt:

Explain the following code:

matrix_mul_float:

vld1.32 {d16-d19}, [r1]!

vld1.32 {d20-d23}, [r1]!

vld1.32 {d0-d3}, [r2]!

vld1.32 {d4-d7}, [r2]!

vmul.f32 q12, q8, d0[0]

vmul.f32 q13, q8, d2[0]

vmul.f32 q14, q8, d4[0]

vmul.f32 q15, q8, d6[0]

vmla.f32 q12, q9, d0[1]

vmla.f32 q13, q9, d2[1]

vmla.f32 q14, q9, d4[1]

vmla.f32 q15, q9, d6[1]

vmla.f32 q12, q10, d1[0]

vmla.f32 q13, q10, d3[0]

vmla.f32 q14, q10, d5[0]

vmla.f32 q15, q10, d7[0]

vmla.f32 q12, q11, d1[1]

vmla.f32 q13, q11, d3[1]

vmla.f32 q14, q11, d5[1]

vmla.f32 q15, q11, d7[1]

vst1.32 {d24-d27}, [r0]!

vst1.32 {d28-d31}, [r0]!

mov pc, lr

response:

Sure, here is an explanation of the code you provided:

matrix_mul_float:

vld1.32 {d16-d19}, [r1]!

vld1.32 {d20-d23}, [r1]!

vld1.32 {d0-d3}, [r2]!

vld1.32 {d4-d7}, [r2]!

vmul.f32 q12, q8, d0[0]

vmul.f32 q13, q8, d2[0]

vmul.f32 q14, q8, d4[0]

vmul.f32 q15, q8, d6[0]

vmla.f32 q12, q9, d0[1]

vmla.f32 q13, q9, d2[1]

vmla.f32 q14, q9, d4[1]

vmla.f32 q15, q9, d6[1]

vmla.f32 q12, q10, d1[0]

vmla.f32 q13, q10, d3[0]

vmla.f32 q14, q10, d5[0]

vmla.f32 q15, q10, d7[0]

vmla.f32 q12, q11, d1[1]

vmla.f32 q13, q11, d3[1]

vmla.f32 q14, q11, d5[1]

vmla.f32 q15, q11, d7[1]

vst1.32 {d24-d27}, [r0]!

vst1.32 {d28-d31}, [r0]!

mov pc, lr

* matrix_mul_float: This is the name of the function. * vld1.32: This instruction loads 8 consecutive 32-bit floating-point values from memory into the registers d16 to d19. The first argument to the instruction is the address of the memory location to load from, and the second argument is a pointer to the registers to store the values in. * vld1.32: This instruction is similar to the previous one, but it loads 8 consecutive 32-bit floating-point values from memory into the registers d20 to d23. * vld1.32: This instruction loads 4 consecutive 32-bit floating-point values from memory into the registers d0 to d3. * vld1.32: This instruction is similar to the previous one, but it loads 4 consecutive 32-bit floating-point values from memory into the registers d4 to d7. * vmul.f32: This instruction multiplies the floating-point values in registers q8 and d0 and stores the result in register q12. * vmla.f32: This instruction is similar to the previous one, but it also adds the product of the floating-point values in registers q9 and d2 to the value in register q12. * vmul.f32: This instruction is similar to the previous ones, but it multiplies the floating-point values in registers q10 and d4 and stores the result in register q14. * vmla.f32: This instruction is similar to the previous ones, but it also adds the product of the floating-point values in registers q11 and d6 to the value in register q14. * vst1.32: This instruction stores 8 consecutive 32-bit floating-point values from the registers d24 to d27 to memory. The first argument to the instruction is the address of the memory location to store to, and the second argument is a pointer to the registers to store the values from. `vst1.

The explaination is not as informative as the JNI example. Each instruction has an explaination but Studio Bot was unable to conclude that it multiplies two matrices. Let’s make sure Studio Bot knows this is NEON.

prompt:

What instruction set is used for matrix_mul_float?

response:

The instruction set used for the matrix_mul_float function is the ARM NEON instruction set. NEON is a SIMD (single instruction, multiple data) instruction set that is designed for high-performance computing. The matrix_mul_float function uses NEON instructions to multiply two matrices of floating-point numbers. Here is a table of the NEON instructions that are used in the matrix_mul_float function: Instruction | Description ---|--- vld1.32 | Loads 8 consecutive 32-bit floating-point values from memory into registers. vmul.f32 | Multiplies two floating-point values and stores the result in a register. vmla.f32 | Adds the product of two floating-point values to a register. vst1.32 | Stores 8 consecutive 32-bit floating-point values from registers to memory. I hope this helps! Let me know if you have any other questions.

Ahhh! There it is! So Studio Bot concludes correctly. Very nice!

So what now?

We have taken Studio Bot on a trip that includes writing, improving, and explaining low-level code.

Studio Bot, and other tools like it are still in their infancy so it will take some time for these tools to be fine-tuned. Given the direction this revolution of software development is heading, there will inexorably be a point where AI will be ready to fully relieve developers of their coding burdens.

So why bother learning software development?

Because we owe it to ourselves and everyone else to understand the code we write or prompt AI to write for us. Each moment we accept that something works by “magic” without asking “why” or “how” is a moment we surrender our authority on the matter to ignorance. The value of the insight obtained from solving a problem should not be taken for granted and AI can be used as a tool to expand our knowledge.

The post From Punched Cards to Prompts appeared first on Big Nerd Ranch.

]]>The post Using the Navigation Component in Jetpack Compose appeared first on Big Nerd Ranch.

]]>An app built entirely in Compose may consist of a single Activity that hosts a composition, meaning that the fragment-based Navigation Architectural Components can no longer be used directly in such an application. Fortunately, navigation-compose provides a compatibility layer for interacting with the Navigation Component from Compose.

The Navigation Component

The androidx.navigation:navigation-compose dependency provides an API for Compose apps to interact with the Navigation Component, taking advantage of its familiar features, including handling up and back navigation and deep links.

The Navigation Component consists of three parts:NavController,NavHost, and the navigation graph.

NavController

The NavController is the class through which the Navigation Component is accessed. It is used to navigate between destinations and maintains each destination’s state and the back stack’s state. An instance of the NavController is obtained through the rememberNavController() method as shown:

val navController = rememberNavController()

NavHost

The NavHost, as the name indicates, serves as a host or container for the current navigation destination. The NavHost also links the NavController with the navigation graph (described below). Creating a NavHost requires an instance of NavController, obtained through rememberNavController() as described above, and a String representing the route associated with the starting point of navigation.

NavHost(navController = navController, startDestination = "home") {

...

}

Navigation Graph

In the fragment-based manifestation of the Navigation Component, the navigation graph consists of an XML resource that describes all destinations and possible navigation paths throughout the app. In Compose, the navigation graph is built using the lambda syntax from the Navigation Kotlin DSL instead of XML. The navigation graph is constructed in the trailing lambda passed to NavHost as shown below:

NavHost(navController = navController, startDestination = "home") {

composable("home") { MealsListScreen() }

composable("details") { MealDetailsScreen() }

}

In this example, the MealsListScreen() composable is associated with the route defined by the String “home,” and the MealDetailsScreen() composable is associated with the “details” route. The startDestination is set to “home,” meaning that the MealsListScreen() composable will be displayed when the app launches.

Note that in the example above, the lambda is passed to the builder parameter of the NavHost function, which has a receiver type of NavGraphBuilder. This allows for the concise syntax for providing composable destinations to the navigation graph through NavGraphBuilder.composable().

The NavGraphBuilder.composable() method has a required route parameter that is a String representing each unique destination on the navigation graph. The composable associated with the destination route is passed to the content parameter using trailing lambda syntax.

Navigating to a Destination

The navigate method of NavController is used to navigate to a destination:

navController.navigate("details")

While it may be tempting to pass the NavController instance down to composables that will trigger navigation, it is best practice not to do so. Centralizing your app’s navigation code in one place makes it easier to understand and maintain. Furthermore, individual composables may appear or behave differently on different screen sizes. For example, a button may result in navigation to a new screen on a phone but not on tablets. Therefore it is best practice to pass functions down to composables for navigation-related events that can be handled in the composable that hosts the NavController.

For example, imagine MealsListScreen takes an onItemClick: () -> Unit parameter. You could then handle that event in the composable that contains NavHost as follows:

NavHost(navController = navController, startDestination = "home") {

composable("home") {

MealsListScreen(onItemClick = { navController.navigate("details") })

}

...

}

Navigation Arguments

Arguments can be passed to a navigation destination by including argument placeholders within the route. If you wanted to extend the example above and pass a string representing an id for the details screen, you would first add a placeholder to the route:

NavHost(navController = navController, startDestination = "home") {

...

composable("details/{mealId}") { MealDetailsScreen(...) }

}

Then you would add an argument to composable, specifying its name and type:

composable(

"details/{mealId}",

arguments = listOf(navArgument("mealId") { type = NavType.StringType })

) { backStackEntry ->

MealDetailsScreen(...)

}

Then, you would need to update calls that navigate to the destination by passing the id as part of the route:

navController.navigate("details/1234")

Finally, you would retrieve the argument from the NavBackStackEntry that is available within the content parameter of composable():

composable(

"details/{mealId}",

arguments = listOf(navArgument("mealId") { type = NavType.StringType })

) { backStackEntry ->

MealDetailsScreen(mealId = backStackEntry.arguments?.getString("mealId"))

}

Deep Links

One of the key benefits of using the Navigation Component is the automatic handling of deep links. Because routes are defined as strings that mimic URIs by convention, they can be built to correspond to the same patterns used for deep links into your app. Carrying forward with the example above and assuming that it is associated with a fictitious web property at https://bignerdranch.com/cookbook you would first add the following intent filter to AndroidManifest.xml to enable the app to receive the appropriate deep links:

<intent-filter>

<action android:name="android.intent.action.VIEW" />

<category android:name="android.intent.category.DEFAULT" />

<category android:name="android.intent.category.BROWSABLE" />

<data

android:host="bignerdranch.com"

android:pathPrefix="/cookbook"

android:scheme="https" />

</intent-filter>

Then you would update your composable destination to handle deep links of the pattern https://bignerdranch.com/cookbook/{mealId} by passing a value to the deepLinks parameter as shown:

composable(

"details/{mealId}",

arguments = listOf(navArgument("mealId") { type = NavType.StringType }),

deepLinks = listOf(navDeepLink { uriPattern = "https://bignerdranch.com/cookbook/{mealId}" })

) { backStackEntry ->

MealDetailsScreen(mealId = backStackEntry.arguments?.getString("mealId"))

}

These deep links could be tested using an ADB command such as:

adb shell am start -d https://bignerdranch.com/cookbook/1234

A Note on Best Practices

In the above demonstrations, string literals were used to define routes and navigation argument names for clarity and simplicity. It is best practice to store these strings as constants or in some other construct to reduce repetition and prevent typo-based bugs. A cleaner implementation of the above example might look like this:

interface Destination {

val route: String

val title: Int

}

object Home : Destination {

override val route: String = "home"

override val title: Int = R.string.app_name

}

object Details: Destination {

override val route: String = "details"

override val title: Int = R.string.meal_details

const val mealIdArg = "mealId"

val routeWithArg: String = "$route/{$mealIdArg}"

val arguments = listOf(navArgument(mealIdArg) { type = NavType.StringType })

fun getNavigationRouteToMeal(mealId: String) = "$route/$mealId"

}

...

NavHost(

navController = navController,

startDestination = Home.route

) {

composable(Home.route) {

MealsListScreen(onItemClick = {

navController.navigate(Details.getNavigationRouteToMeal(it))

})

}

composable(

Details.routeWithArg,

arguments = Details.arguments

) { backStackEntry ->

MealDetailsScreen(

mealId = backStackEntry.arguments?.getString(Details.mealIdArg) ?: ""

)

}

}

Drawbacks

Lack of argument type safety

The primary drawback is the lack of type safety for passing arguments. While this may not seem like a big deal if you are following the best practice of not passing complex data in navigation arguments, it would still be preferable to have compile-time assurance, even for simple types.

Repetitive and cumbersome API for passing arguments

In addition to the lack of type safety, the API for defining argument types and parsing them from the BackStackEntry is fairly repetitive and cumbersome. It involves a fair amount of potentially tricky string concatenation to build routes.

No navigation editor

Many developers have grown to enjoy using the Navigation Editor to get a visual representation of the navigation graph for their apps and to quickly and easily define navigation actions. There is no comparable tool for Compose.

Alternatives

Use Fragments to host Compose

Perhaps the most straightforward alternative, especially if you’re already accustomed to the fragment-based Navigation component, would be to use Fragments to host each screen-level composable. This would carry the benefit of type-safe navigation arguments and access to the Navigation Editor.

Third-party alternatives

As a result of the drawbacks above, several third-party tools, such as Compose Destinations and Voyager have been developed. For a detailed overview and comparison of these alternatives, we recommend this article.

The post Using the Navigation Component in Jetpack Compose appeared first on Big Nerd Ranch.

]]>The post Tell Our BNR Story: Ramon C. appeared first on Big Nerd Ranch.

]]>Kicking off our inaugural interview is Ramon C., a Senior Mobile Engineer here at Big Nerd Ranch. He was first introduced to the Ranch when he attended an Android bootcamp six years ago. Here’s his take on what makes for great dev training, what’s important when building a brand new team, and how BNR set him up for success in his career.

How did you find out about Big Nerd Ranch?

When I first discovered BNR, I was doing a rotational program where you try out a few positions and get to pick one at the end. I was in the middle of a rotation on Android development. It had been quite a while since I’d taken any Android classes. The last time I had done any extensive learning on this topic, I’d been in college.

I asked myself: “What’s the difference between what I needed in college versus what I need professionally?”

I quickly figured out that they’re two different worlds. I realized to do the job properly, I needed to do some additional training. I started by Googling but didn’t find anything that stood out to me.

Then my boss at the time said, “Hey, I heard about this program called Big Nerd Ranch, you should take a look.”

I read through the program, and I thought, “Oh, this rules.”

I essentially put in the proposal with my company, got approved, and in the spring or summer of 2016, I went to Atlanta for the Advanced Android bootcamp training course. It was a really awesome experience. When I got back to my job, I couldn’t shut up about the BNR training I had just done. They literally could not get me to stop talking about it.

One of the things I did immediately after that training was to buy the BNR Android Programming Guide—I think it was the second or third version—and that’s been my bible for Android development ever since. Right after my bootcamp, I even gave a 20-minute presentation on why, if we ever got more developers, everyone should go through the BNR training that I did.

What made BNR stand out to you?

I don’t remember any of the other companies I researched, which I suppose says it all! I remember the enthusiastic Google reviews helped, too.

I think the biggest thing that I noticed—and I still remember this—is that it was very clear what you were going to learn in each class. At the time, I was still trying to figure out what the important technologies were. The BNR program listed out exactly the technologies that were going to be used and taught.

It was clear, it was easy to find, and it was easy to choose the level that I needed to be at. It was easy to justify saying, “Hey, this is exactly where we want to take our app, this is exactly what they’re going to teach us, and these are the skills I can take back with me.”

With some of the other training programs, it felt almost intentionally vague. It was difficult to discern exactly what knowledge and techniques we would be learning.

What did you initially want to get out of the BNR training? How did that match up with what you learned?

So obviously there was the technical stuff I wanted to take back, but one of the things that I was still trying to learn at the time was how to speak the language of Android as a new-ish Android developer. School doesn’t really teach you how to talk to other developers about the platforms you’re using.

I was one of two developers at the time at my company, so there weren’t a lot of opportunities to learn how to speak that language. Being in the bootcamp with other developers, all of us doing Android at the same time—it was a really good opportunity to train those skills.

How do developers talk to each other about the platform? How can I bring the right skills and knowledge back to the company? There were important questions to answer because we were going to start building a new team of developers and we had to set a baseline of how we were going to interact with each other.

What was the training experience like? What about it stood out to you?

Intense is definitely the number one way I would describe the BNR training experience. I was there for five days, and you’re essentially doing a full work week of training: eight hours a day with a lunch break.

What I really liked about the training—and again, this goes back to what I just said about learning the language of Android—was learning how to talk to other developers. Like, “Hey I’m stuck on this, I don’t really understand what’s going on here.” Being able to ask those technical questions to another developer, you know?

Those are the kind of soft skills that every developer should have. This was a prime opportunity to grow those skills outside of the workplace.

In a classroom setting like BNR’s, when you ask a trivial question, it can be a learning opportunity for the whole class. At work, you never know how a question might come off. BNR offered a risk-free environment where there were no bad questions, and I could freely talk with other devs. I really believe this was instrumental to me at the beginning of my career.

What were the results of the training? What impact did it have on you?

You take all this training material back with you, and you go, “All right, now I have to discern what we’re going to extract and use in our own apps. How do I make sure we’re building our apps in the most up-to-date way with the technology I was just taught?”

I ended up having to do a pretty big proof of concept for the company I was working for, explaining the technologies and giving an overall rating for our needs. At the time, we were a brand new dev team, so we had to ask more questions: for example, how much effort is it going to take to have a brand new team learn these technologies? We ended up not using the most cutting-edge standards to start with because, you know, it’s a brand new team; you have to make some concessions for the sake of efficiency.

Without the BNR overview, it might’ve taken us a lot longer to figure that out. Plus, we were able to pick and choose some of the up-and-coming technologies at the time—RxJava was on its way up, Retrofit 2 was on its way up—and we were able to have those conversations about tech stack, helping the team to start learning these new platforms and get familiar with them for future projects, all that jazz.

I ended up having a pretty big influence on these conversations. Being able to say, “These are the things I think we should use,” “This might be a faster way to solve this problem,” things like that. I became the go-to guy on a lot of this after just one course, which was kind of a weird experience for me: to go from feeling like I was on the outside looking in to being the resident expert consulted on a lot of those high-stakes decisions.

What do you think helped you to feel more comfortable stepping into that kind of role?

I would thank the literature—just having that information handy. When you’re reading something in a classroom setting, not everything sticks or resonates immediately.

But when you’re able to say, “I have this material right here, just take a look at this example. This is what I think we can get to, this is our end state, and this is how I think we should build things.” Having that reference gave me the confidence to share my opinion because I was talking with evidence to back up what I was saying.

The other resource that was great was the online portal BNR gave us access to, which had all the sample projects and everything we had done throughout the bootcamp, from start to finish. Being able to go back to that after the bootcamp was over was huge. Sometimes, parts of those projects would just pop into my head, and I could go back to check, “Oh, there’s a bug here that reminds me of one from the BNR training, let me go look that up.”

The BNR training gave me a bunch of different tools, both coding and non-coding-related, to make these big impactful statements at work and be confident in backing them up.

You mentioned that you were able to convince your team to give BNR a try as a group. Can you share a little more about that?

So I finished the rotational work program and, at that point, I had been talking for eight months about the BNR training, going, “This is the one, this is it!”

And, well, BNR isn’t the cheapest option out there. We ended up going with a more affordable option for our first training, and it was just… awful. One of the worst bootcamps I have ever done in my life. The developer got up there and just didn’t have a plan: there was nothing prepared. We got nothing from it—we were basically following Android community forum documents at that point, and I was thinking, “I could’ve done this on my own.”

By comparison, after we finally invested in the BNR training, I don’t think a single person left that room going, “This wasn’t worth the time that we put into this,” because we as a company had learned how to talk to each other as developers. We also learned language skills: we had completely new people alongside more tenured developers who had been working on projects since the release of the platform, but we were able to get everyone on the same page about the platform we were going to use.

There were also questions about modernization that we had to start asking, but we didn’t really know where to start. BNR gave us the starting line to have that modernization conversation and pointed us to the most modern solutions they were using. That pushed us to the next stage: “Are our apps even in a position to get this modern? What’s the best compromise between our team’s capabilities and the technology available to us?”

We got to talk about which direction we wanted to go and then set up a plan: even if we didn’t use the most advanced tools now, we could work our way up to them.

Do you have any final thoughts you’d like to share?

I really do believe in our training. It has helped me in my career in ways that I can’t really put into words. I’ve been able to take the skills that I learned almost six years ago, both technical and non-technical, and apply them in the work that I do every day. Not to get too sappy, but I cannot thank Big Nerd Ranch enough for getting me to where I am—not just with those Android platforms but in my career as a whole.

The post Tell Our BNR Story: Ramon C. appeared first on Big Nerd Ranch.

]]>The post Coming soon: Android Programming: The Big Nerd Ranch Guide, Fifth Edition appeared first on Big Nerd Ranch.

]]>To help you develop the skills to create new Android apps and maintain existing ones, we’re excited to announce the fifth edition of our popular guide to Android programming. While there are only four authors officially listed in the book, the making of this fifth edition was a group effort at Big Nerd Ranch. We consulted heavily with the entire Android team here (and also reached out to other experts in the field) to provide the best learning experience possible for someone looking to dip their toes in modern Android development. Read on to learn about what’s changing in this latest installation!

What’s New In The Fifth Edition?

In 2019, reactive programming was gaining popularity as a way to architect Android code into maintainable and extensible structures. With the release of Jetpack Compose in 2021, Google poured gasoline onto that flame, supercharging the reactive programming movement. Reactive programming and Jetpack Compose’s declarative framework fit together seamlessly and provide an excellent foundation to build modern Android apps.

While this book still provides readers with all the necessary knowledge to make apps using the best practices of modern Android development, Jetpack Compose is the future of Android development, and the fifth edition of the book is intended to prepare readers for that future. In addition to four new chapters introducing readers to Jetpack Compose, changes throughout the book are intended to ease the transition from developing apps with Android’s existing UI toolkit to developing apps with Jetpack Compose. For example, there are many ways to write asynchronous code on Android, but the book exclusively uses Kotlin coroutines to perform asynchronous operations. Coroutines are baked directly into Jetpack Compose’s API as well as being excellent tools to interact with UI written with Android’s existing UI toolkit. We also reworked many of our projects to follow the unidirectional data flow architecture pattern. The unidirectional data flow pattern is essential to building apps with Jetpack Compose – and it also helps organize code when building apps with Android’s existing UI toolkit.

Other changes in the fifth edition go beyond Jetpack Compose. For example, testing is an integral part of building modern Android apps, and we have rewritten the content around testing from the ground up with practical examples. Also, to reflect how modern Android applications are developed, the book leans on libraries from Google and third parties. Apps in the book now use the Navigation component library to manage navigation between screens and libraries like Retrofit, Moshi, and Coil – as well as the Jetpack libraries – to handle other core features.

On (Virtual) Shelves Soon

Whether you’re trying to create your first Android app or your 50th one, this book provides simple explanations and meaningful dives into the Android platform, helping you quickly harness the platform to create the apps you want and need.

The eBook version for the fifth edition of Android Programming: The Big Nerd Ranch Guide is available for pre-order now and will launch in early May. Printed versions of the book will be available in early June. If you or your team would like the full Big Nerd Ranch experience, we offer instructor-led bootcamps where one of our expert instructors will teach you the Essentials of Android.

You can find more information on our books page or view a recording of our webinar, where we covered what’s new, tips and tricks, and more. Happy coding!

The post Coming soon: Android Programming: The Big Nerd Ranch Guide, Fifth Edition appeared first on Big Nerd Ranch.

]]>The post TalkBack Crash Course appeared first on Big Nerd Ranch.

]]>TalkBack is Google’s screen reader for Android devices. It’s hard to understand Android’s accessibility issues without experiencing them yourself. Take 5 minutes to read this article, download this cheatsheet, and then go explore your TalkBack app for yourself. You might be surprised by what you find.

What is a screen reader?

What it sounds like: It reads out what’s on the screen.

It mediates your interaction with the screen

When a screen reader is active, touches to the screen activate its responses. It acts like a go-between to explain what you’re pointing at. It also provides a gesture language to tell it how to interact with the thing you last pointed at. There are also TalkBack gestures for controlling the device in general, like triggering the Back button.

It tells you what you touch

You can touch anywhere on the screen, listen to what the screen reader says, and if you’ve touched a button or something else you can interact with, ask the screen reader to click it for you by double-tapping.

It maintains a current focus

Imagine you have finished typing an email. Now you need to click the Send button. It could take a long time to find the button just by probing the screen and listening to what is at each touch point.

So there’s an alternative. The screen reader keeps an item in focus. Touching the screen places the focus on the touched item. But from there, you can “look around” that point by swiping left and right. This works like using Tab and Shift-Tab to navigate a form in your browser.

This notion of “focus” also lets you act on the current focus: click a button, start editing in a text field, or nudge a slider. Unlike the normal touch gestures used to do these things, TalkBack’s gestures are addressed to the screen as a whole. You can double-tap anywhere on the home screen to click a focused button.

Getting Started with TalkBack

How do I turn TalkBack on?

- Open the “Settings” application.

- Select “Accessibility” from the “System” section, way down at the bottom.

- Select “TalkBack” from the “Services” section.

- Toggle it to “On”.

To make this easier in future, you may want to configure a volume key shortcut.

How do I turn TalkBack off?

Head back into Settings and turn off TalkBack Android.

To make toggling TalkBack on and off easier, you can enable the suspend and resume shortcut in the “Miscellaneous” section of TalkBack Settings.

How do I use TalkBack?

TalkBack Android is controlled entirely by one finger.

Gestures with two or more fingers will not be handled by TalkBack. They’ll be sent directly to the underlying view. Two or more fingers will “pierce the veil”, so you can pinch-to-zoom or scroll the same as ever.

How do I look around?

- Forward: Swipe right with one finger anywhere on the home screen to move the focus forward. This is “forward” in the sense of text, so right/down.

- Back: Swipe left with one finger anywhere on the screen to move the focus back.

How do I explore what’s on the screen by touch?

Touch, listen. Touch somewhere else, listen again. You can also touch-and-drag to more rapidly explore the screen.

How is this useful?

- Jump to known location: If you know the widget you want to interact with is somewhere in the middle of the screen, this can get you close to it, and you can swipe the focus around from there.

- Hack around busted accessibility: Not everyone tests their apps using TalkBack. Being able to aim the focus “by hand” can break you out of a focus loop or let you aim focus at something you can’t otherwise tab to.

- Skimming: Touch-and-drag can be faster than pushing the focus around with separate swipes.

How do I type?

Google’s keyboard supports a variant of explore-by-touch:

- Slide your finger around till you hear the letter you want.

- Lift your finger. This types the letter.

This combines the “find” and “activate” gestures to speed up typing.

Some third-party keyboards follow Google’s example.

Others do not – sometimes, by choice; other times, seemingly, out of ignorance.

How do I interact with the focus?

- Click: Double-tap anywhere on the screen with one finger.

- Long Click: Double-tap and hold anywhere on the screen with one finger.

How do I navigate a long webpage?

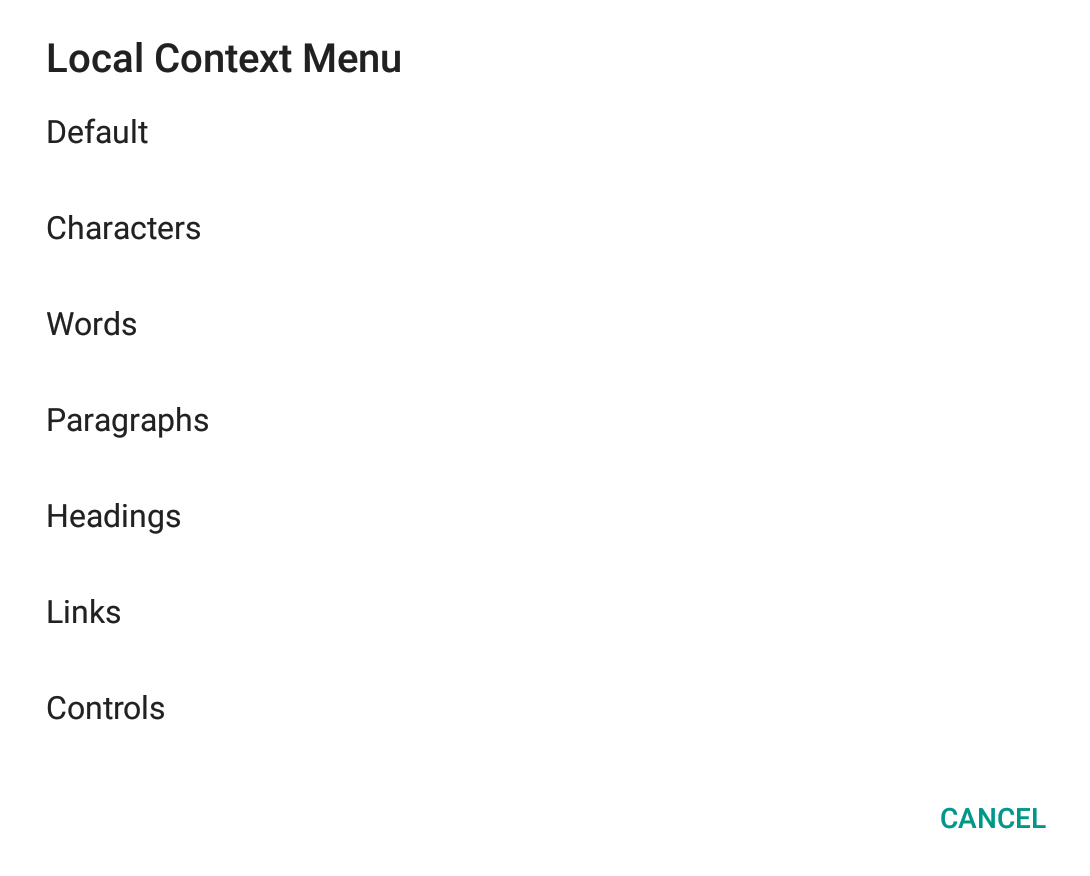

Maybe you’re wondering what swiping up and down does now. This lets you tweak what left and right swipes do by changing the navigation settings. Instead of moving element to element, they can navigate a more specific list of things, like “all headings” or “all links”.

- Next Navigation Setting: Swipe down

- Previous Navigation Setting: Swipe up

Swipes are also how you scroll:

- Scroll Forward: Swipe right then left

- Scroll Backward: Swipe left then right

And they provide a way to reliably jump focus around the screen:

- Focus First Item on Screen: Swipe up then down

- Focus Last Item on Screen: Swipe down then up

As a bonus, you can use the local context menu (more on this below) to ask TalkBack to read all the links in a block of text, without you having to cursor through the list yourself.

What about hardware buttons?

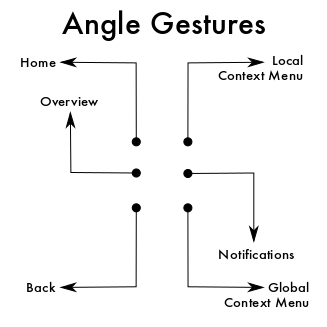

For this, you’ll use angle gestures. These go one direction, then 90 degrees in another direction.

These also let you trigger some other system-level actions, like showing the notifications.

Gesture Cheatsheets

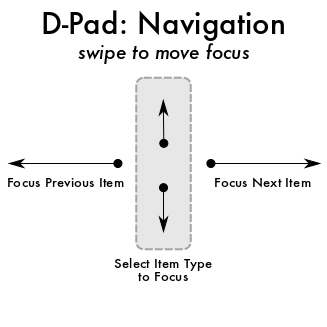

The Basic D-Pad: Moves

- Simple swipes affect focus.

Swipe left and right to move focus between items.

Swipe up and down to change the kind of item to focus on.

For example, you may want to only focus on headings or links.

(If you’ve used iOS VoiceOver, this is kind of like some of the Rotor options.)

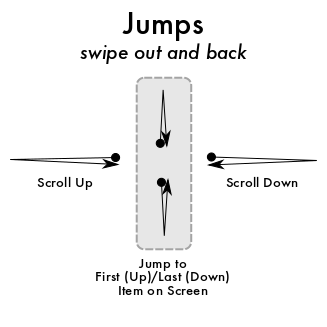

Back-And-Forth: Jumps

Quickly swiping out and then back to where you started in a continous motion either jumps focus or scrolls the screen.

(Though if a slider is focused, its thumb “scrolls” rather than the screen.)

- Swipe up then back to focus the first item on the screen.

Swipe down then back to focus the last item on the screen.

If you know the item you want to focus is near the top or bottom of the screen, these gestures can help you focus that item faster.

You can also build muscle memory for the controls in an app relative to these anchor points.

- Swipe left then back to scroll up or to move a slider left.

Swipe right then back to scroll down or to move a slider right.

You can also use two fingers to scroll like always, because two-finger touches

are ignored by TalkBack.

Angle: System Buttons

Actions like Back, Home, and Overview once had hardware buttons.

They still occupy a privileged place in the UI.

TalkBack also gives them pride of place: they have their own dedicated gestures.

The angle gestures equivalent to the hardware buttons involve swiping to the left:

- Home: Swipe up then left

- Back: Swipe down then left

- Overview: Swipe left then up

Angle gestures that involve swiping to the right are more peculiar to TalkBack:

- Notification Drawer: Swipe right then down

- This lets you pull the drawer down without worrying about feeling for the top of the physical screen.s

- Local Context Menu: Swipe up, then right

- The contents of this menu depend in part on the current focus.

- For example, if you’ve focused on an unlabeled image, you’ll see an option to give it a custom label.

- But they also provide another way to change the kind of item focused.

Changing the target item using the menu can be faster than making repeated single swipes up or down.

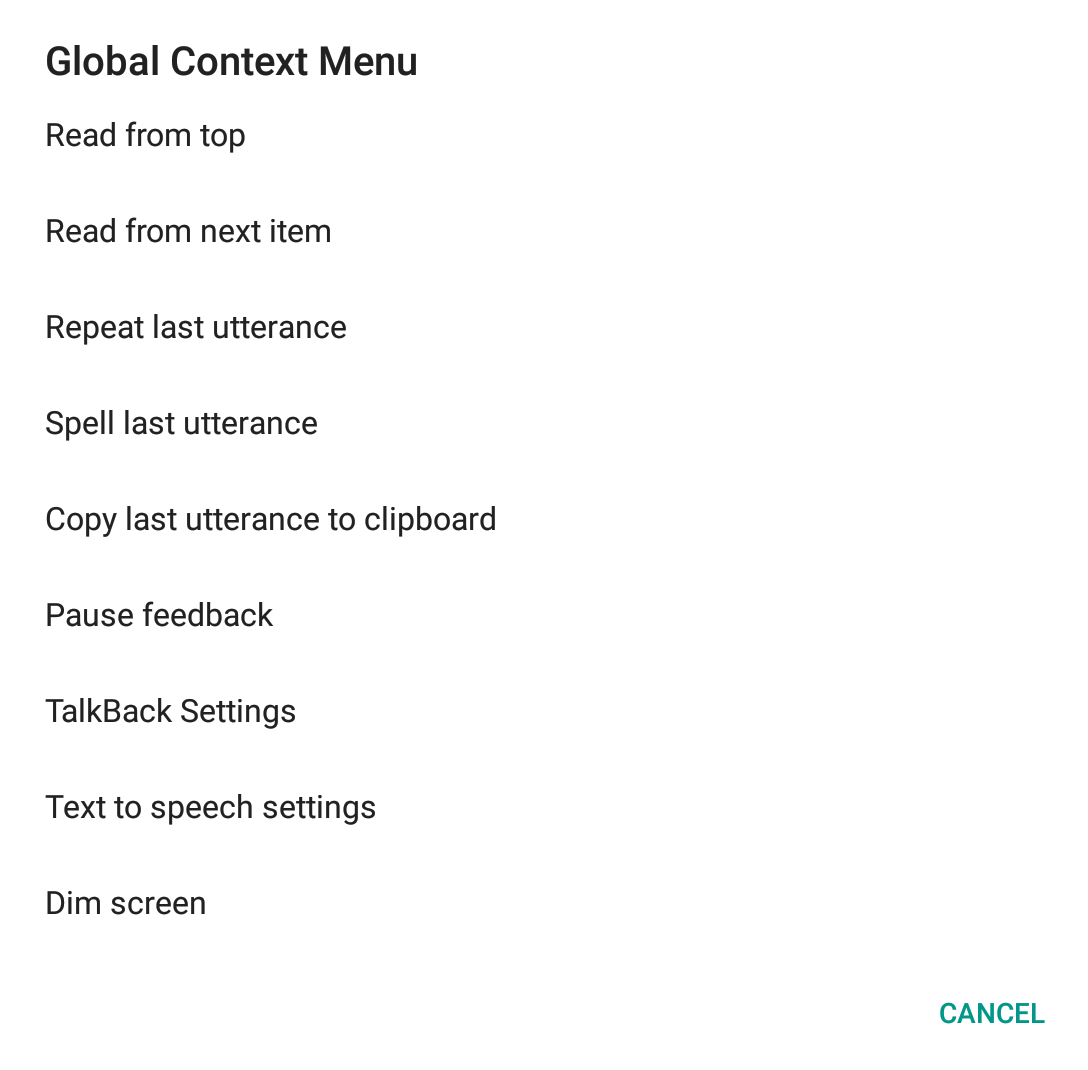

- Global Context Menu: Swipe down then right

- If you want to tell TalkBack to read a chunk of content, or reread something, or even copy the last thing it read to the clipboard, this is the menu for you.

- It also has convenient shortcuts to TalkBack Settings (where you can change gesture behavior, amongst other things) and an option to Dim Screen.

Try this at home!

- Configure your own actions for currently-unassigned gestures

- Use the local context menu to set a custom label for an unlabeled widget

- Use the global context menu to “Dim screen”. This will black out the whole screen, so you’re forced to lean on TalkBack to interact with your device.

- Use Google’s Accessibility Scanner app on the home page of your app. What issues does it find?

- Explore the keyboard shortcuts available with a hardware keyboard.

Other Assistive Tech

TalkBack isn’t the only assistive tech available on Android. Here are several other unique ways people might be interacting with your app:

- BrailleBack: like TalkBack, but for Braille displays

- Switch Access: could be one switch, or two like in a sip-puff straw device

- Voice Access: hands-free control

For the More Curious: How does TalkBack work?

It navigates a virtual tree of accessibility nodes. Luckily, SDK classes take care of building these nodes in most cases. Tweaking the tree can improve the experience, though. And if you’re building a custom view, or abusing a stock one, you’ll need to work a bit to make it accessible.

TalkBack will send performClick() and performLongClick() as needed.

For more, dig into the android.view.accessibility documentation and follow the links from there.

For yet more, Google has published the TalkBack and Switch Access source code. Included is a test app that exercises the functionality of both. Playing with this test app would be a great way to see everything these tools can do.

The post TalkBack Crash Course appeared first on Big Nerd Ranch.

]]>The post Splash Screens: The Final Right Way appeared first on Big Nerd Ranch.

]]>targetSdk. In Android 12, there are quite a few changes affecting our users and in this blog post we will focus on the upcoming App Splash Screen changes.

Splash screens have quite a history with Android, and the Ranch. In a previous post here we detailed the “right way” to add a splash screen to your Android app by overriding android:windowBackground. Now, splash screen support has been grafted into the Android platform (and backported with an androidx library). Starting with Android 12, App Splash Screens are enabled by default, and if you do not take the time to update your application when targeting Android 12, it may result in an undesirable effect.

To illustrate these changes, I’ve updated an example app from my previous post on DiffUtil. I’ve modified my build.gradle to change my targetSdk and compileSdk to 31 and here are the results on my device running the Android 12 beta:

Not bad. By default on Android 12, the Splash Screen API uses the windowBackground of your theme if it’s a single color and the launcher icon. But what about the majority of our users, on devices below Android 12?

The same build on a pre-Android 12 device:

Well, that was anticlimactic… 😒

AndroidX to the Rescue!

Like most things in the Android world, we can access backports of newer APIs via an androidx dependency. To start, add the following line to your build.gradle:

implementation "androidx.core:core-splashscreen:1.0.0-alpha02"

Sync your project with gradle files, then we’ll make an adjustment to our applications theme.

Create a new style, with it’s parent set to Theme.SplashScreen, along with a few other attributes you can customize:

<style name="Theme.Splash" parent="Theme.SplashScreen">

<item name="postSplashScreenTheme">@style/Theme.App</item>

<item name="windowSplashScreenBackground">#FF27140F</item>

<item name="windowSplashScreenAnimatedIcon">@drawable/app_icon</item>

</style>

postSplashScreenThemeis a reference to your applications “normal” theme that will be set after your splash screen has finished showing.windowSplashScreenBackgroundsets the background color of your splash screen.windowSplashScreenAnimatedIconreferences the icon you wish to show in the center of your splash screen. You may have noticed the “Animated” part ofwindowSplashScreenAnimatedIcon😉 we’ll cover that a bit later.

Next, modify your application theme in your AndroidManifest.xml:

<application

--------

android:theme="@style/Theme.Splash"

-------

>

Finally, in your launch Activity, before setContentView() is called (order matters here) in your onCreate() method, add the following method call:

override fun onCreate(savedInstanceState: Bundle?) {

super.onCreate(savedInstanceState)

installSplashScreen() // <---- this one!!

setContentView(R.layout.activity_main)

}

That’s it! Let’s build and run our application on a pre-Android 12 device to see what has changed.

👌 nice

The androidx splash screen library also lets us customize the splash screen’s exit animation. Let’s add a quick fade exit animation:

installSplashScreen().setOnExitAnimationListener { splashScreenView ->

val alpha = ObjectAnimator.ofFloat(

splashScreenView.view,

View.ALPHA,

1f,

0f

)

alpha.duration = 500L

alpha.doOnEnd { splashScreenView.remove() }

alpha.start()

}

}

🎉 It’s always nice to bring new features to older versions of Android, as we know OS updates can take some time to propogate through our user base. But, this doesn’t mean we should not take advantage of new APIs available to us.

You may have read Google’s Splash Screen Guide and noticed a few more features available on Android 12. The Splash Screen platform API supports Animated Vector Drawables and even allows us to add a branding image to our splash screen.

Taking a look at the docs:

The app icon (1) should be a vector drawable, and it can be static or animated. Although animations can have an unlimited duration, we recommend that it not exceed 1,000 milliseconds. By default, the launcher icon is used.

Let’s take adavantage of this by animating a “ring” around our Planets application icon. I’ve converted the VectorDrawable icon to an AnimatedVectorDrawable and added this animation:

<animated-vector xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:aapt="http://schemas.android.com/aapt">

<aapt:attr name="android:drawable">

<vector

----

[my vector icon]

---

</vector>

</aapt:attr>

<target android:name="ring">

<aapt:attr name="android:animation">

<objectAnimator

android:propertyName="trimPathEnd"

android:startOffset="500"

android:duration="500"

android:valueFrom="0"

android:valueTo="1"

android:valueType="floatType"

android:interpolator="@android:interpolator/fast_out_slow_in"/>

</aapt:attr>

</target>

Let’s take a look…

Neat!

It’s nice to see Android have a standard for App Splash Screens built into the platform. Up until now, it was common practice to implement your own splash screen for your application. If you did implement a splash screen, it’s important to note any unintended side effects when upgrading your targetSdk to Android 12. Applications using a separate Activity for their splash screen without a properly configured theme may result in a user seeing 2 splash screens! 😳

Cheers to another Android release, and thanks for reading.

Happy coding!

P.S. 📣 Special thanks to Clint M. and Donovan L. for design help! 🙌

The post Splash Screens: The Final Right Way appeared first on Big Nerd Ranch.

]]>The post Coming soon: Kotlin Programming: The Big Nerd Ranch Guide, Second Edition appeared first on Big Nerd Ranch.

]]>**Updated on 10/5/21** The Kindle version of the book is now available on Amazon! We’ve updated this post to include the latest information about the book’s availability.

To help you adopt the language in your own projects and learn about these more advanced features, we’re excited to announce the second edition of our popular guide to Kotlin programming. Read on to learn about what’s changing in this latest installation!

Kotlin 1.5 & IntelliJ 2021.2

Kotlin 1.5 introduced language features for unsigned numeric types and value classes (formerly called inline classes). There are also a number of quality of life improvements that have been introduced since our first Kotlin guide, like better type inference, when expressions with variable declarations, and improvements to the standard library. The second edition of our Kotlin programming guide incorporates these new features and will guide you through writing idiomatic Kotlin code. We’ve also updated our guide for the latest version of IntelliJ IDEA, which has also gotten some power-ups in its Kotlin editing features.

Whether you’re coming to Kotlin for the first time or are brushing up your skillset, we think you’ll love these new features.

Coroutines

The kotlinx.coroutines library has changed drastically since we teased it in the first edition of our Kotlin programming guide. Right off the bat, Coroutines have graduated from experimental to stable and have become widely adopted in many production codebases.

We’ve also seen the introduction and adoption of Channels and Flows, which build on top of Coroutines to allow parts of your application to communicate more easily. The book has three brand-new dedicated chapters for Coroutines, Channels, and Flows.

Kotlin Multiplatform

Although many people think of Kotlin as a replacement for Java, that doesn’t paint the full picture. Kotlin is a multiplatform language and comes in three flavors: Kotlin/JVM, Kotlin/Native, and Kotlin/JS.

The last part of the book will give you a taste of Kotlin Multiplatform. We will walk you through how to make a single project that compiles into a Java executable, a native macOS application, and a JavaScript script for use on the web.

If you’ve been looking for a way to share code between platforms, give Kotlin Multiplatform a shot. We think you’ll be surprised at how much it can do for you. And if you want to learn more about Kotlin Multiplatform, may we point you in the direction of a few other blog posts?

On Virtual Shelves Now

Whether you’re an Android developer who wants to use Google’s recommended programming language, a web developer who wants a concise alternative to Java, a developer looking to share code between apps on different platforms, a new programmer looking to learn a modern programming language, or anyone in between, Kotlin is a great choice. We hope that you pick up our Kotlin programming guide and enjoy working with the language as much as we do at Big Nerd Ranch.

The Kindle version for the second edition of Kotlin Programming: The Big Nerd Ranch Guide is available now from Amazon and will be rolling out to other eBook retailers soon. Printed versions of the book are available for pre-order now and will ship in late October.

If you or your team would like the full Big Nerd Ranch experience, we offer instructor-led bootcamps where one of our expert instructors will teach you the Essentials of Kotlin. You can find more information on our books page. Also keep an eye on this blog and our social media for more updates from the Ranch.

Happy coding!

The post Coming soon: Kotlin Programming: The Big Nerd Ranch Guide, Second Edition appeared first on Big Nerd Ranch.

]]>The post Using StateFlow over LiveData for end-to-end operations appeared first on Big Nerd Ranch.

]]>on to find out why using StateFlow and Flows can be a more flexible and performant solution for observing data in all areas of your application.

Using StateFlow over LiveData Overview

LiveData is totally fine for the view layer of an application. We even get a handy LiveData extension on Flows for this very reason. However, the important thing to remember is that Flows and StateFlow are better for long-running tasks at lower levels of an application. They’ll allow us to manipulate data for final delivery to our UI without hitting the main thread on the way.

LiveData is still a great data holder class for lifecycle awareness. But therein lies the caveat – it’s a specific Android data structure for specific Android needs. If you’re following recommended architecture, you might notice that we’d want to keep lower levels of your application, such as a repository, free of any Android dependencies. LiveData would be a poor choice here because it is only readable on the main thread. This can be fine if you’re displaying data from a one-shot request, but imagine something more complex like having to pull data from multiple requests to populate a single object. Options like Transformations or MediatorLiveData still use the main thread for their executions. That won’t scale well for additional data mapping needs, putting our users on a one-way train straight to Jankland!

Using StateFlow

So what are the alternatives? Enter StateFlow. As Kotlin coroutines nudge into first-class status for handling async operations and Flows replace RxJava as the reactive solution for streams of data, we are seeing these pure Kotlin structures become a recommended way to emit values to subscribers.

I’ll demonstrate their use in a small sample app that leverages StateFlow for two use cases. First, we’ll see how it provides the latest values in a stream of data for an instant search feature. Then, I’ll show you how we can collect the results in a way that plays nicely with the lifecycle. The first thing we need is a snappy name – we’ll call it PupPeruser.

Fetching Pups

PupPeruser takes in search queries and launches requests to an API with each new character the user types. The API returns a list of photos matching our searched breed, and the app will find a random image in this list to show the user.

On top of the latest Android library for ViewModel, we are also makings sure to add implementation 'androidx.lifecycle:lifecycle-runtime-ktx:$latest_version' and implementation "org.jetbrains.kotlinx:kotlinx-coroutines-core:$latest_version" to our app’s Gradle file.

First, let’s take a look at how our state is represented:

sealed class PupImageState {

data class Success(val imageUrl: String) : PupImageState()

data class Error(val exception: Exception) : PupImageState()

object InProgress : PupImageState()

object InitialState: PupImageState()

}

Next, let’s check out our DogRepository, which contains our search query as a MutableStateFlow. Just like LiveData, we get both mutable and immutable types to control what is exposed. However, we need a value to initialize our mutable type, which is another benefit to Stateflow as it protects against nullability and forces us to consider initial states.

class DogRepository {

private val queryStateFlow = MutableStateFlow("")

fun setQuery(query: String){

queryStateFlow.value = query

}

}

While the syntax for setting a value property is like LiveData, these value updates are conflated. That means only the most recent value will ever be collected. This is backed by structural equality checks via Any.equals() – essentially this all just means that StateFlow will never emit the same two values in a row. This makes it a great structure to build search functionality from, since we’re A) always searching the latest value input by the user and B) not wasting network calls by repeating a search for the same query twice (not likely to happen with our instant search functionality, but useful if we wanted to launch searches with a button press instead).

Keeping in Flow

Now let’s see how we can get a reactive search function with queryStateFlow:

fun getPupImageFlow(): Flow<PupImageState>{

return queryStateFlow

.debounce(300)

.filter {it.isNotEmpty()}

.flatMapLatest {query ->

flow {

emit(getPupImage(query))

}.onStart {

emit(PupImageState.InProgress)

}.catch {

emit(PupImageState.Error(Exception("Sorry, there are no pups by that name. Keep looking!")))

}

}

.flowOn(Dispatchers.IO)

}

Let’s unpack the rest here. The chain of operators highlights that a huge benefit of using StateFlow over LiveData is that, well, it’s a Flow! We can apply operators throughout our stream of data, such as debounce() to control when the emission occurs and filter() to protect against wasteful API queries by halting the flow from moving downstream. But what is really important in terms of our threading is flatMapLatest() and flowOn().

The flatMapLatest operator transforms our initial flow, the query text, into a new flow that emits our PupImageState. The method getPupImage(query) makes our API call and returns a PupImageState.Success(imageUrl: String).

The flowOn() operator isn’t necessarily something we need for a one-shot request, but it shows another benefit of StateFlow in that we can designate what thread it executes on. Imagine a long-running operation inside flatMapLatest(), like inserting into a database. By switching to a separate dispatcher, we can ensure that it happens off the main thread.

Be careful, it’s hot!

One of the most important things to remember about StateFlow is that it is a hot observable, meaning it is able to emit states whether or not there is an active subscriber for it. This could be dangerous if not managed properly, as we could end up wasting battery, cellular data, or other system resources by making network calls while our app is in the background.

When interacting with our StateFlow in a higher layer such as our ViewModel or Activity, we can make several configurations to handle this behavior. Let’s first take a look at our MainViewModel.

class MainViewModel(private val repository: DogRepository): ViewModel() {

val mainStateFlow: StateFlow<PupImageState> = repository.getPupImageFlow()

.stateIn(viewModelScope, SharingStarted.WhileSubscribed(5000), PupImageState.InitialState)

fun performQuery(query: String){

repository.setQuery(query)

}

}

Our MainViewModel exposes an immutable StateFlow for collection in the MainActivity. It does this by taking the standard Flow from our DogRepository method and using the stateIn() operator to turn it into a StateFlow. The SharingStarted parameter is where we can configure how it emits state as a hot observable. While we have three options, the function that we want to pass in is SharingStarted.WhileSubscribed(stopTimeoutMillis: Long). This will cancel our upstream flow from producing values when it isn’t observed, and the timeout value keeps our process alive just long enough for a lifecycle recreation in an orientation change.

To further optimize, we’ll need to be aware of some things on the other side of the coin. And without further ado, our MainActivity!

class MainActivity : AppCompatActivity() {

private val viewModel: MainViewModel by viewModels { MainViewModelFactory(DogRepository()) }

private lateinit var binding: ActivityMainBinding

override fun onCreate(savedInstanceState: Bundle?) {

super.onCreate(savedInstanceState)

binding = ActivityMainBinding.inflate(layoutInflater)

setContentView(binding.root)

binding.editTextPupSearch.apply {

addTextChangedListener { editable ->

if (editable.toString().isEmpty()){

binding.textViewErrorText.isVisible = false

}

viewModel.performQuery(editable.toString().trim())

}

}

lifecycleScope.launch {

viewModel.mainStateFlow

.flowWithLifecycle(lifecycle, Lifecycle.State.STARTED)

.collect { state ->

if (state is PupImageState.Success) {

showImage(state.imageUrl)

} else if (state is PupImageState.Error){

binding.textViewErrorText.text = state.exception.message

}

binding.imageViewPupPicture.isVisible = state is PupImageState.Success

binding.progressBarLoading.isVisible = state is PupImageState.InProgress

binding.textViewErrorText.isVisible = state is PupImageState.Error

}

}

}

}

The collect() method here is known as a terminal operator, as it’s the call we make to actually start the flow and collect its result. It’s similar to how we’d use a LiveData Observer, although the difference is that we don’t get any lifecycle awareness by default. Luckily, we have a few options.

The flowWithLifecycle() operator is the best option for our simple case of collecting a single flow in the view layer. It will cancel the upstream flow if the lifecycle state falls below the designated level and restart once the lifecycle gets back to that level. This works by calling Lifecycle.repeatOnLifecycle() under the hood, which is the recommended way to collect multiple flows in parallel.